Tests Using the TruEra Web App

Under Artifacts in the left-side navigator, click Test Harness. If no model tests are currently defined, a message to that effect is displayed.

From here, you can create, view, and edit model tests. Each option is covered next, in turn.

Creating Model Tests

To create a new model test, click the Add new test button.

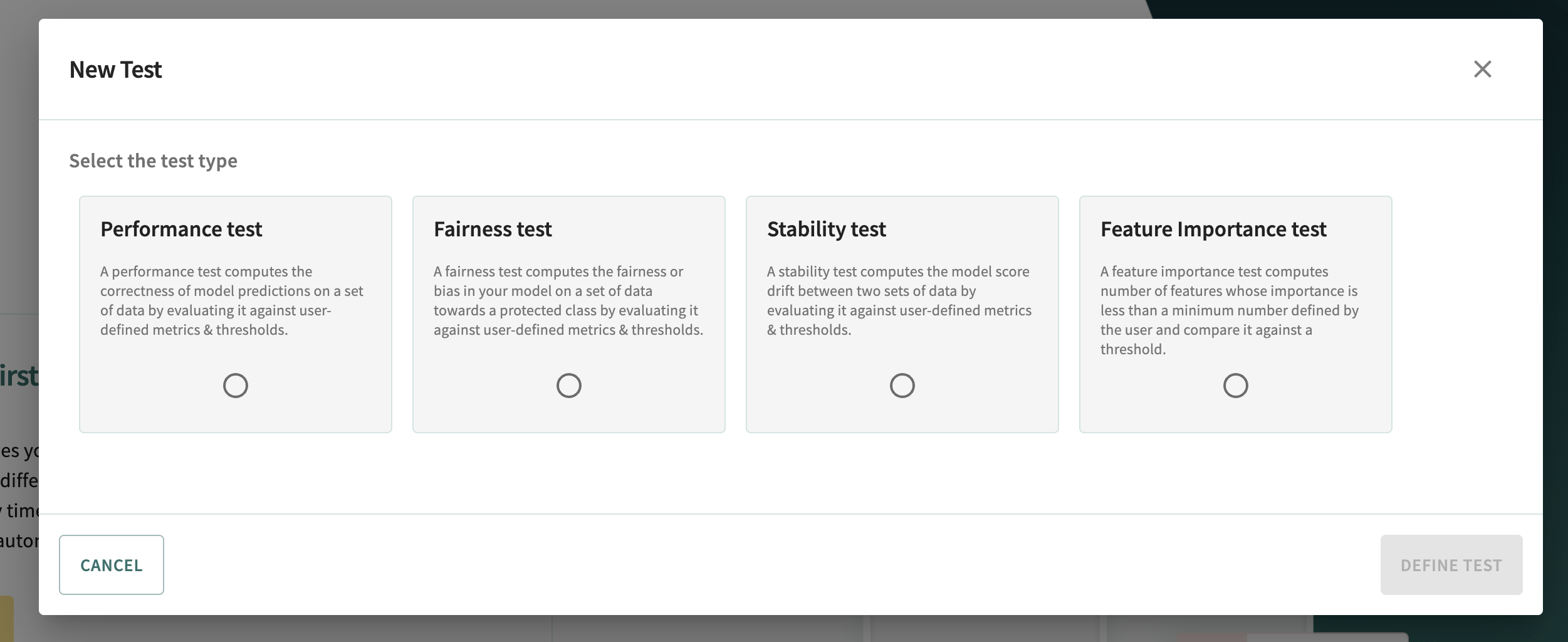

Select the type of test you wish to define, then click DEFINE TEST.

Performance Tests

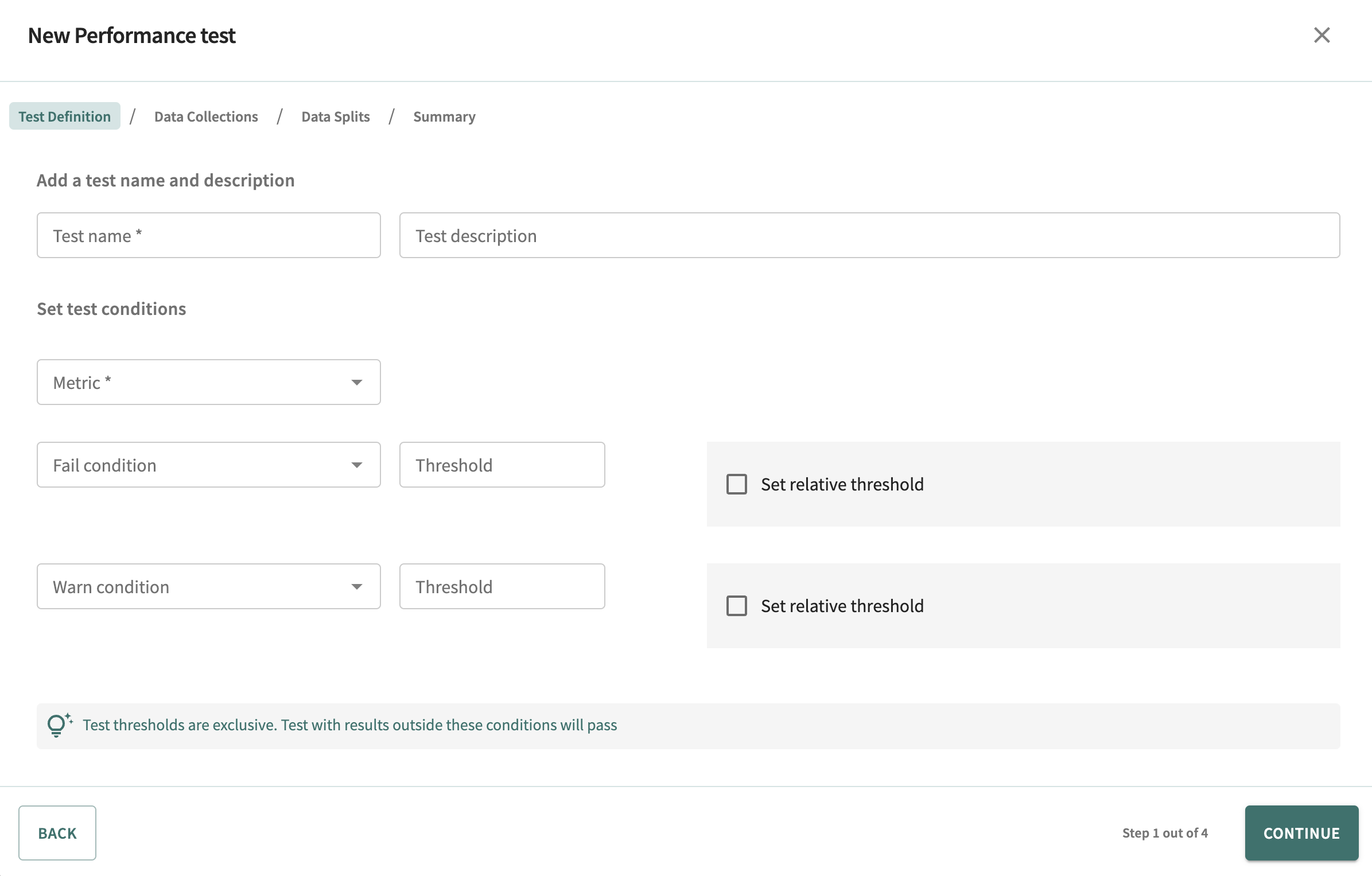

Upon selecting the Performance test option, the performance test creation wizard is displayed.

Enter a name for the test and an optional description, then select the performance metric used to measure the results.

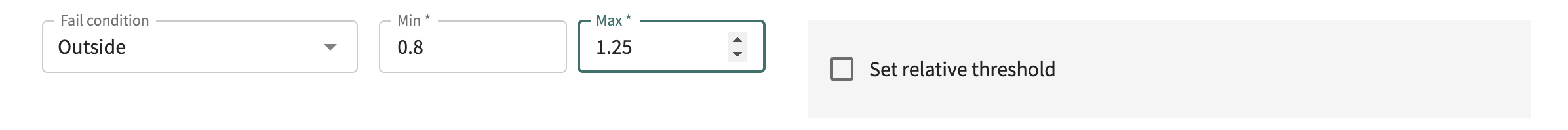

Absolute conditions are specified by selecting a condition, then adjusting the threshold accordingly.

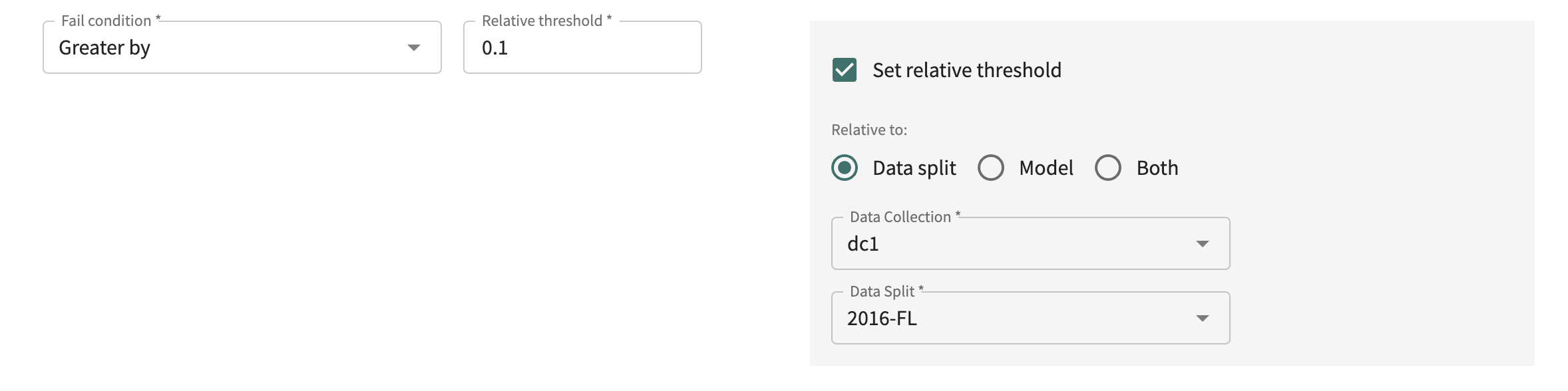

Relative thresholds are specified by selecting the Set relative threshold option, then specifying the relative baseline split or model setting for the test.
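
The difference between the two kinds of condition can be sketched in a few lines of Python. This is only an illustration of the idea, not TruEra's implementation: the function names, the condition labels, and the assumption that a relative threshold is expressed as a fractional change from the baseline are all hypothetical.

```python
import operator

# Hypothetical condition names; the labels in the web app may differ.
CONDITIONS = {
    "less_than": operator.lt,
    "greater_than": operator.gt,
}


def absolute_condition_met(metric_value, condition, threshold):
    """Compare the metric directly against a fixed threshold."""
    return CONDITIONS[condition](metric_value, threshold)


def relative_condition_met(metric_value, baseline_value, condition, relative_threshold):
    """Compare the metric's fractional change from a baseline against a threshold.

    Assumption: the relative threshold is a fraction of the baseline,
    so -0.05 means "5% below the baseline".
    """
    relative_change = (metric_value - baseline_value) / abs(baseline_value)
    return CONDITIONS[condition](relative_change, relative_threshold)


# Absolute: the condition triggers when accuracy is below 0.80.
print(absolute_condition_met(0.78, "less_than", 0.80))  # True

# Relative: the condition triggers when accuracy is more than 5% below
# the accuracy measured on the baseline split (0.90 here).
print(relative_condition_met(0.78, 0.90, "less_than", -0.05))  # True
```

An absolute condition needs only the metric value, while a relative condition also needs a baseline measurement, which is why the wizard asks for a baseline split or model when Set relative threshold is selected.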

Defining Test Conditions

When a condition is defined relative to a split or a model only, the available data collection for testing is restricted to the data collection currently associated with the model. Selection is not restricted in cases for which an absolute condition is defined or the condition is relative to the performance of the model on one of its splits.

Click CONTINUE to select the data collection(s) on which the test will run.

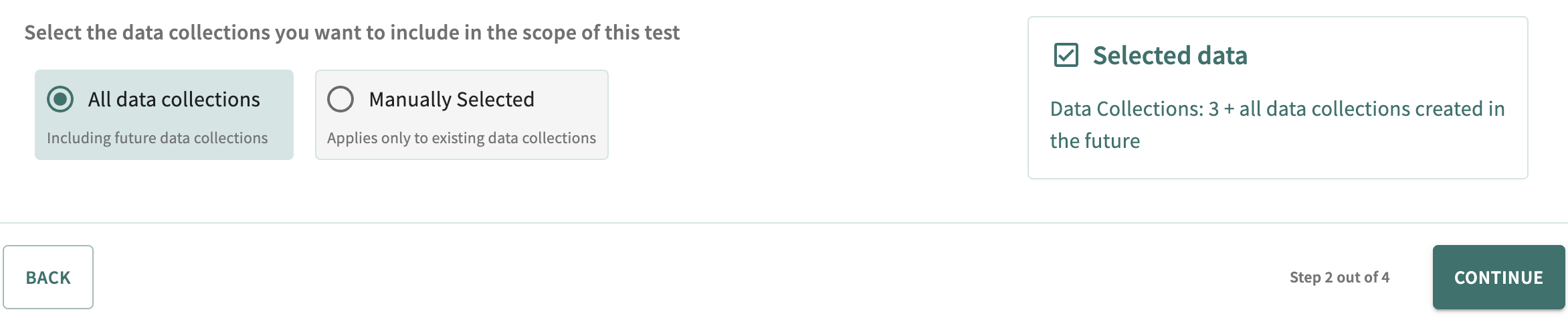

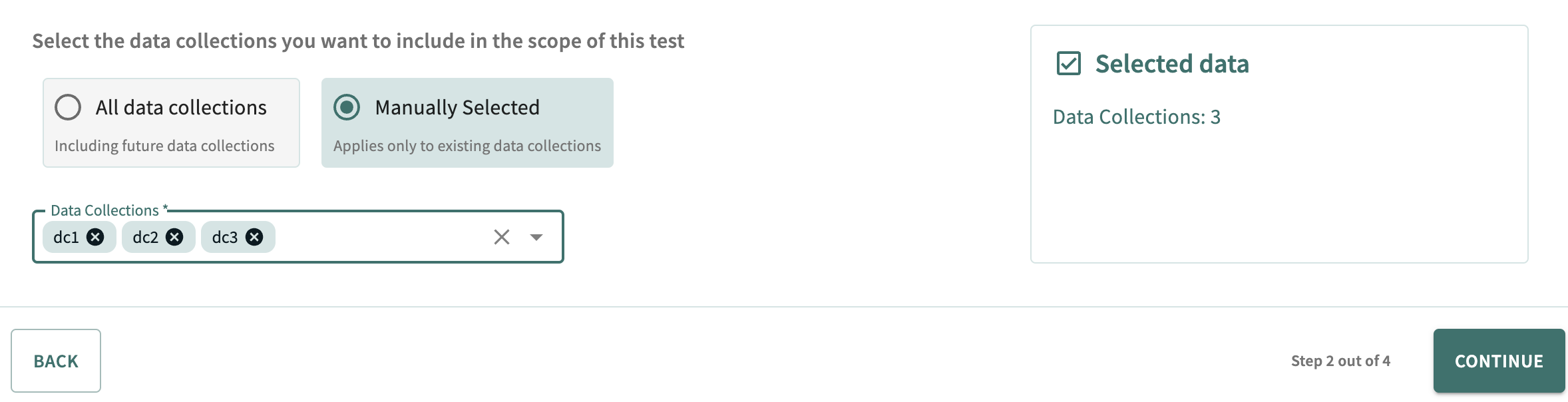

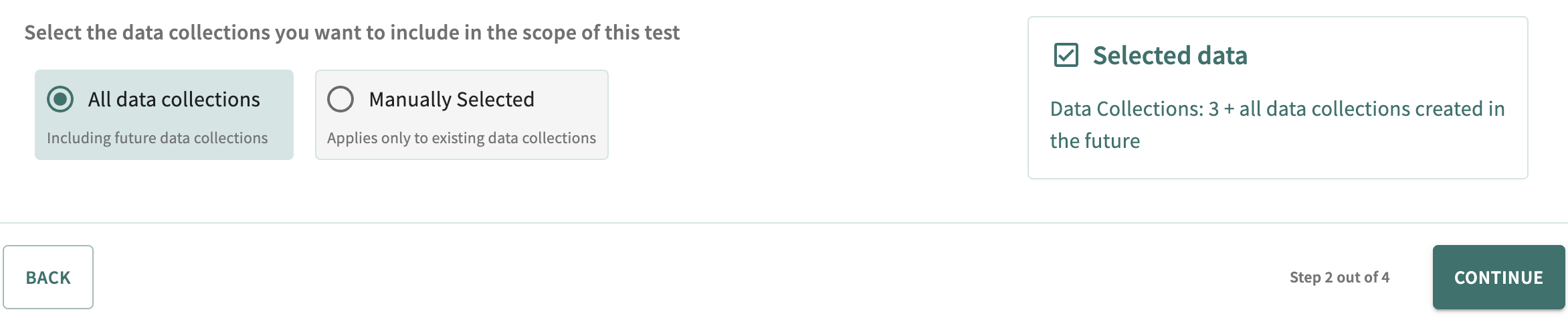

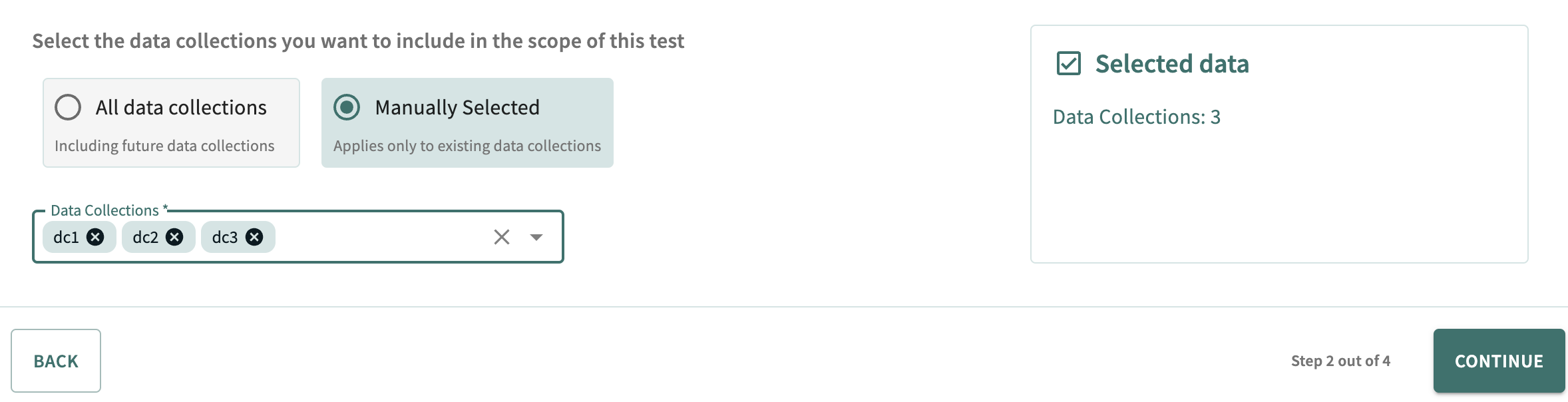

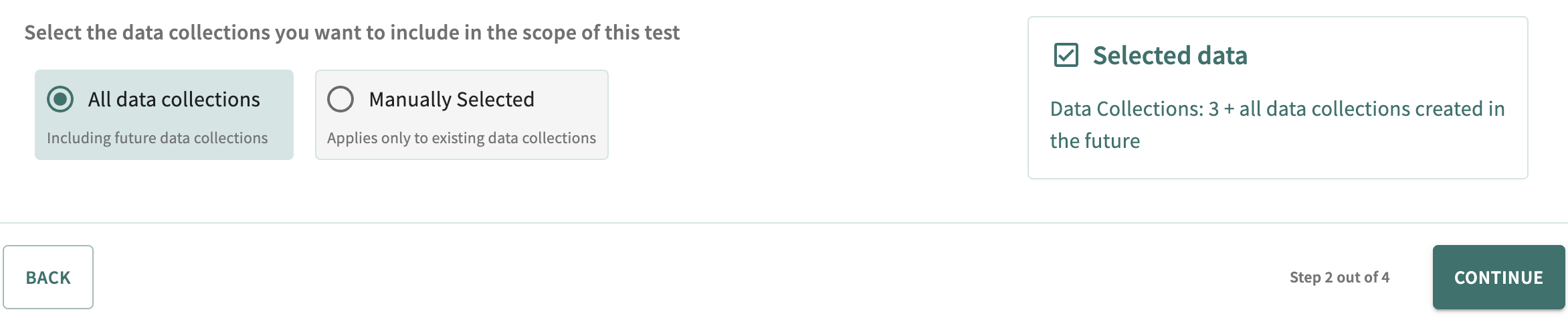

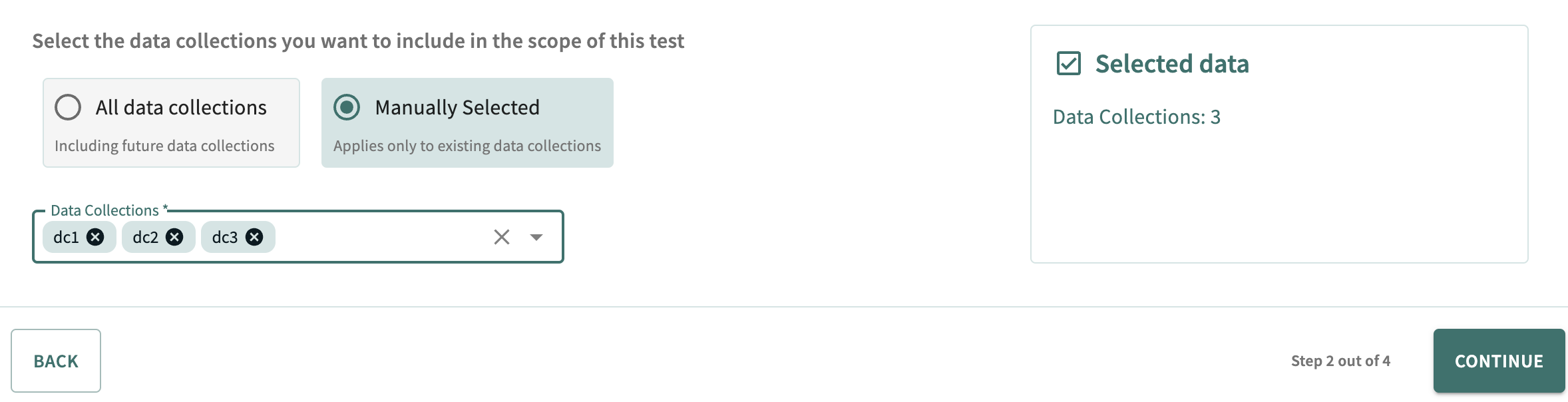

Select All data collections to run the test on all available data collections — those currently existing and those defined later — with respect to the splits/segments configured next. Choose Manually selected to select specific data collections for testing.

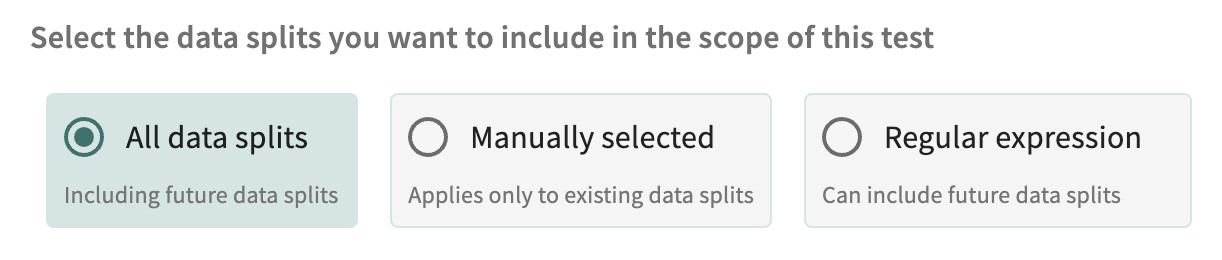

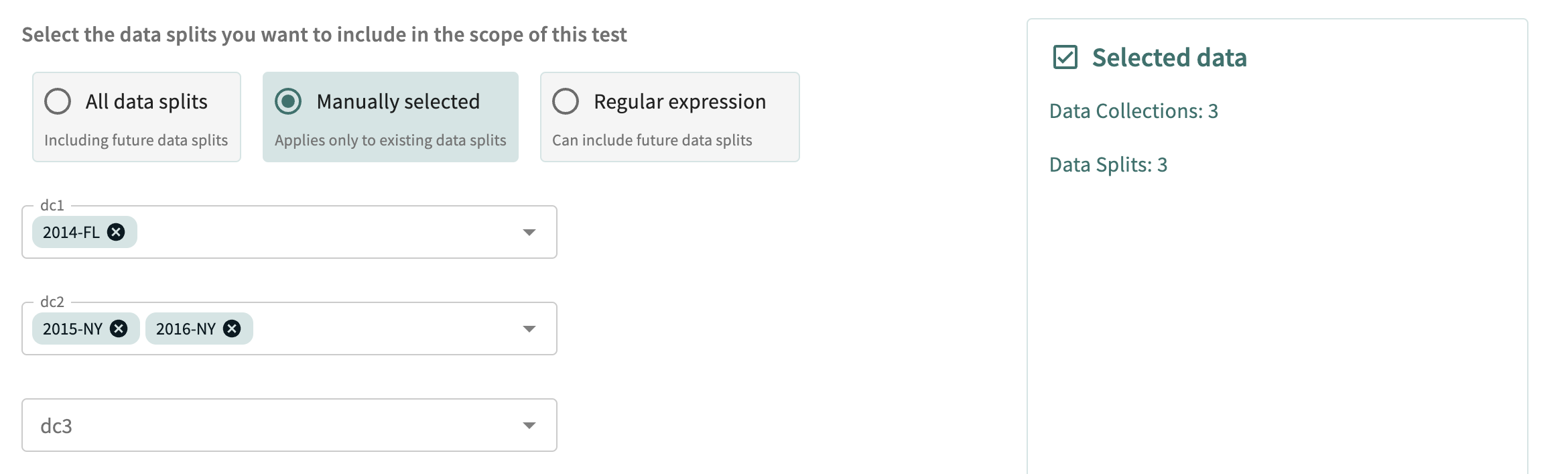

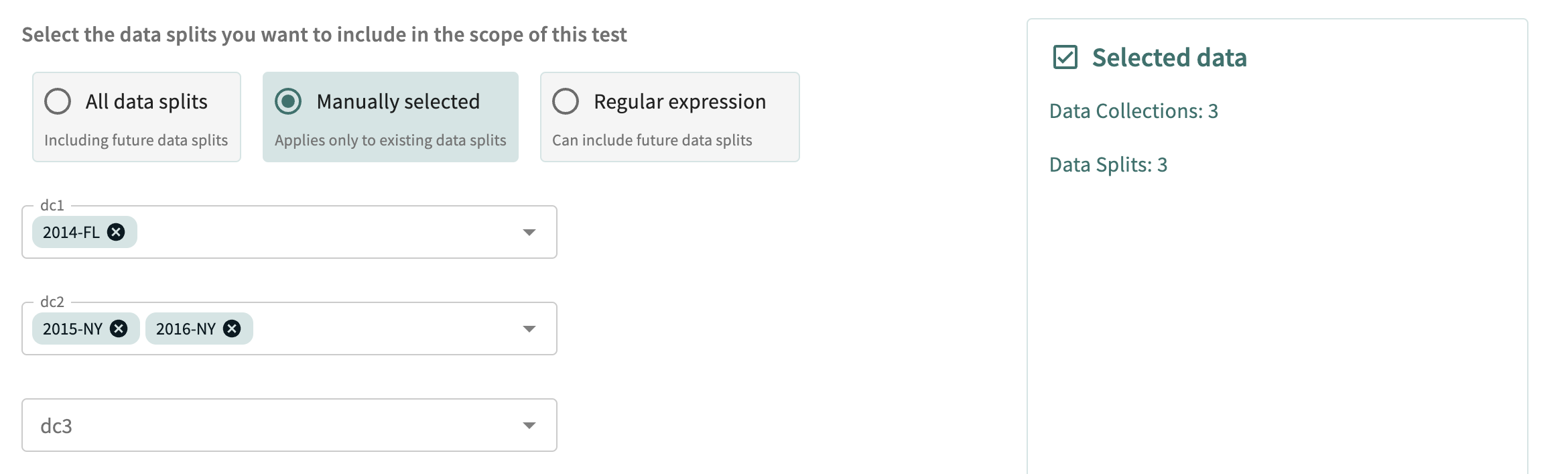

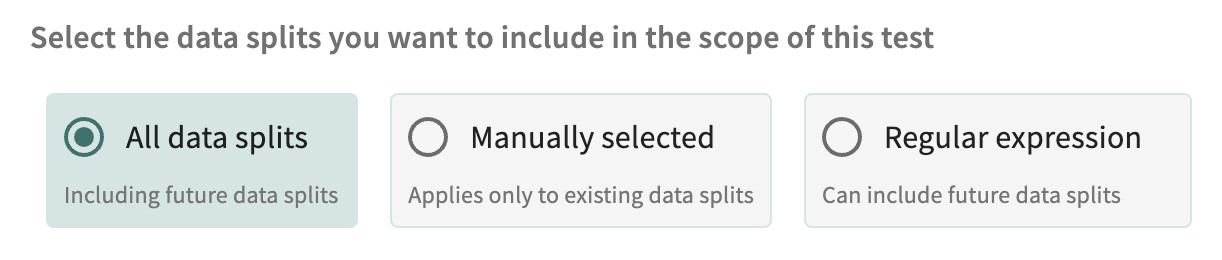

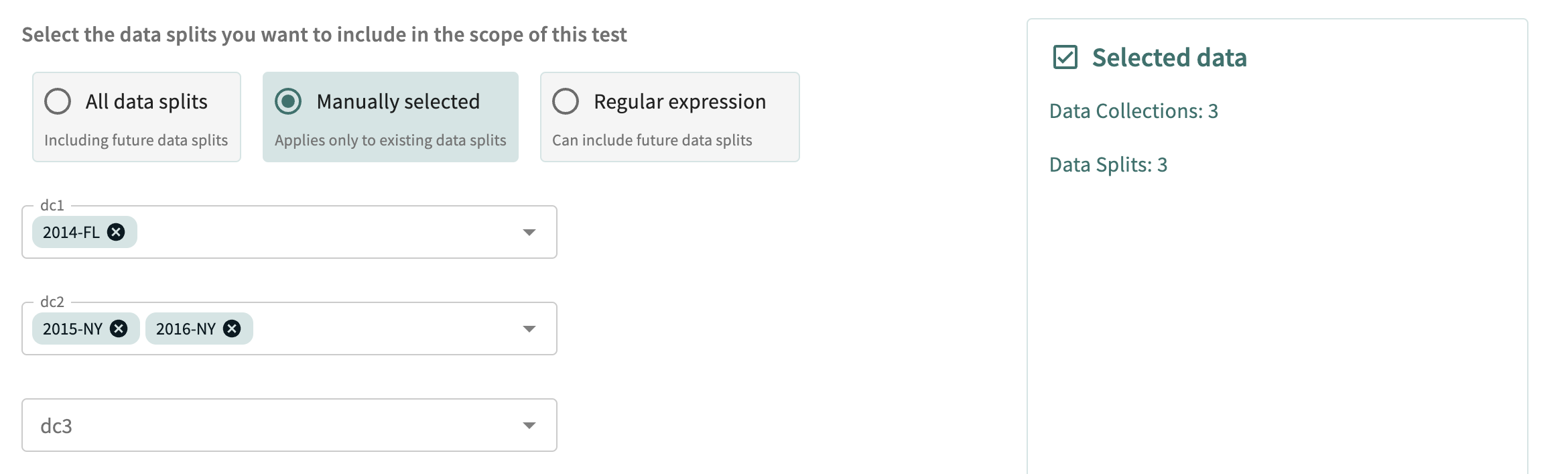

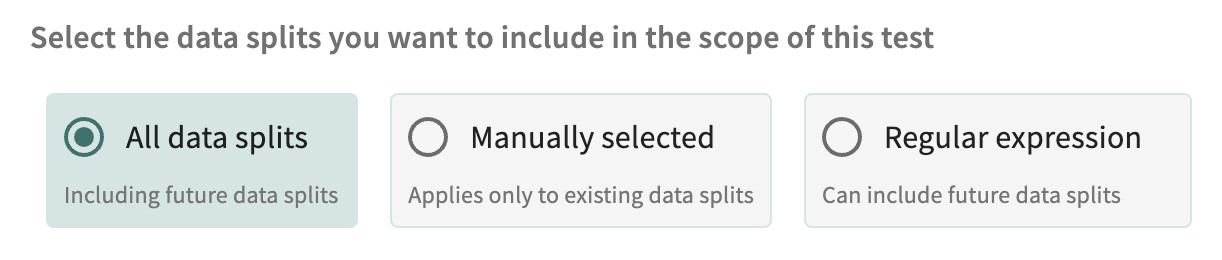

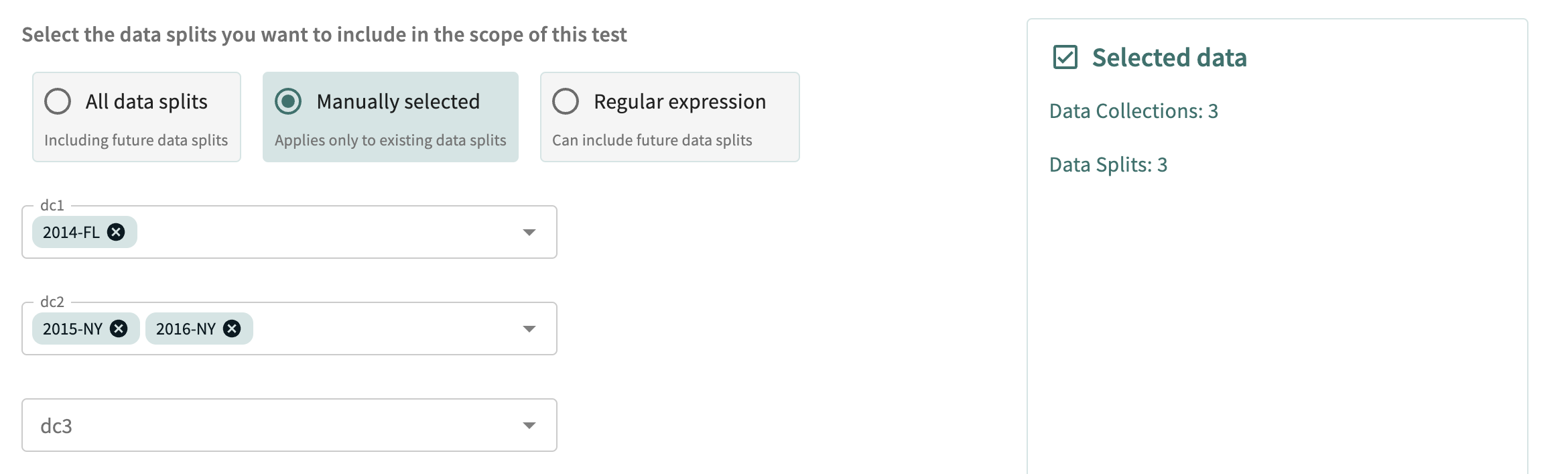

Click CONTINUE to select the test data split(s) and segment(s) with respect to previously defined data collections. Three options are available:

- All data splits

- Manual data splits (not available if you selected All data collections above)

- Data splits matching a regular expression.

Select All data splits to run the test on all data splits, both existing and subsequently defined. Choose Manually selected to limit testing to the data splits you pick.

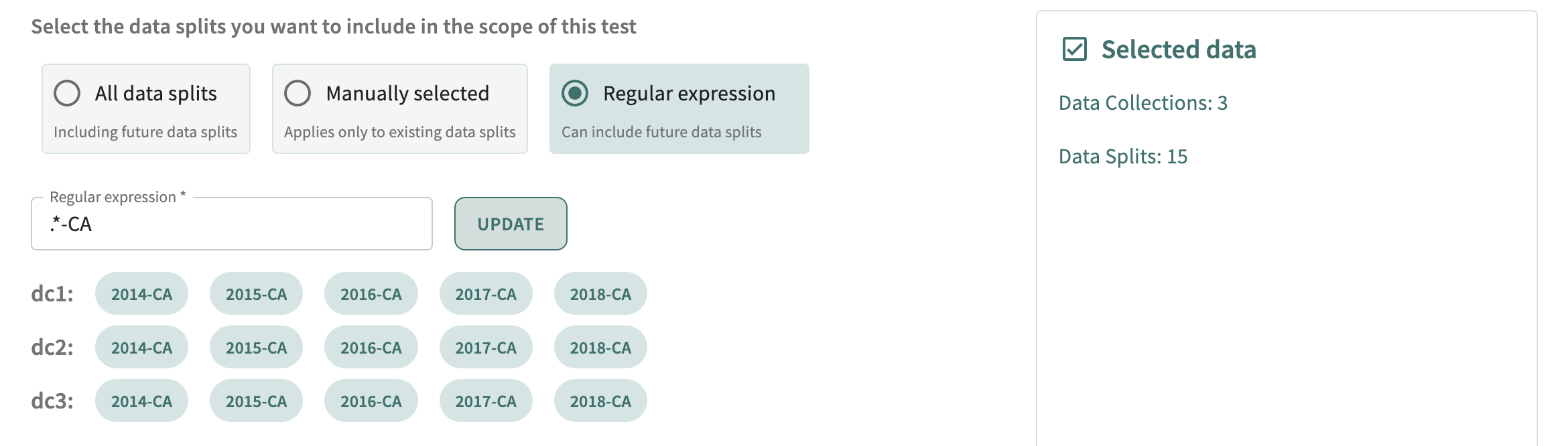

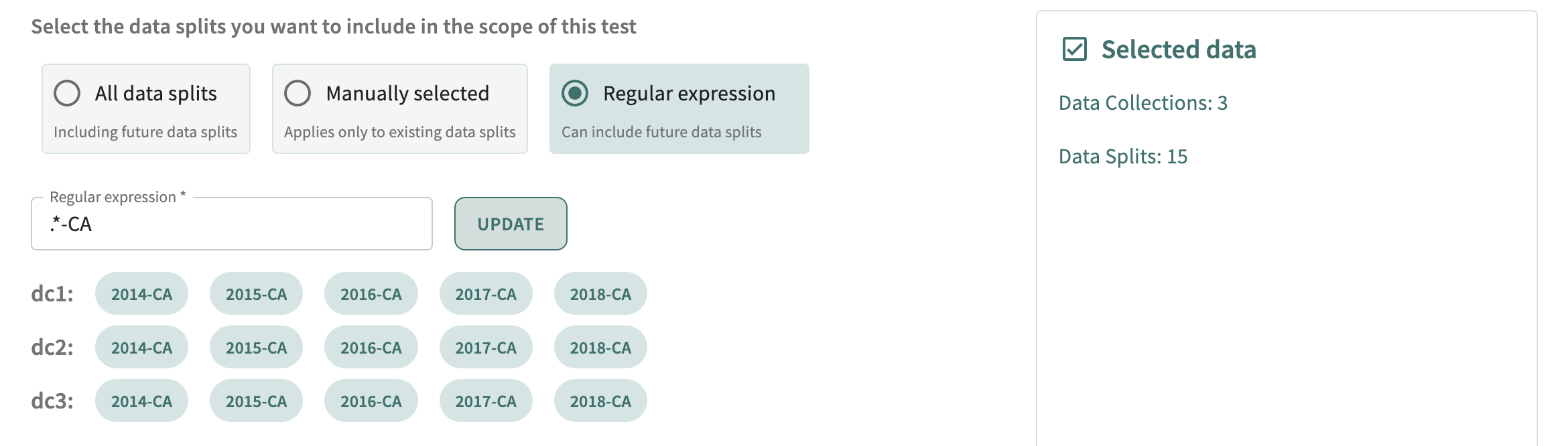

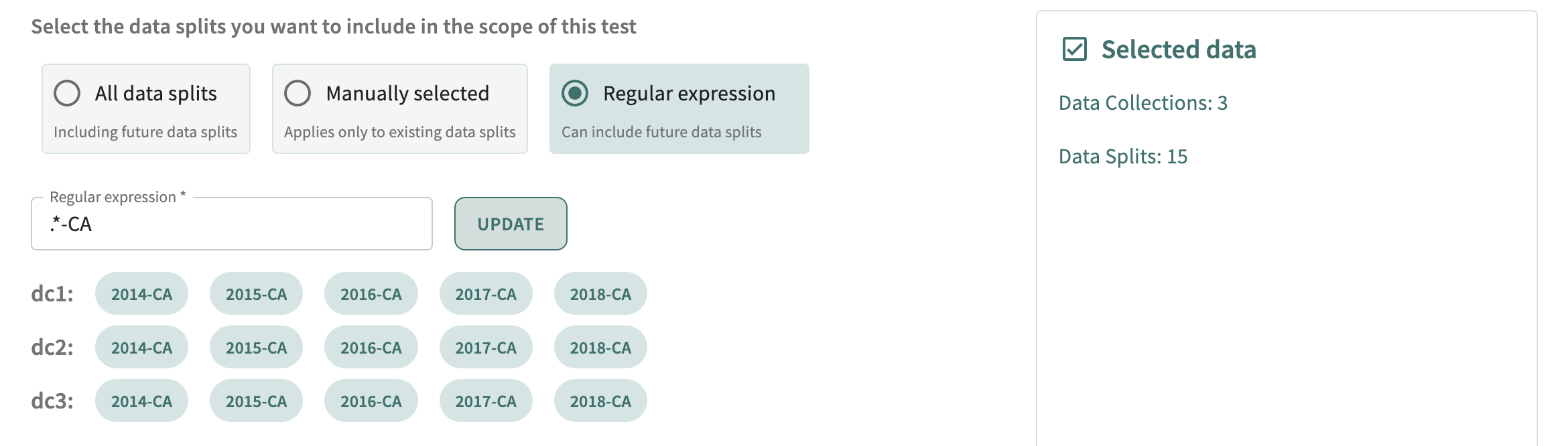

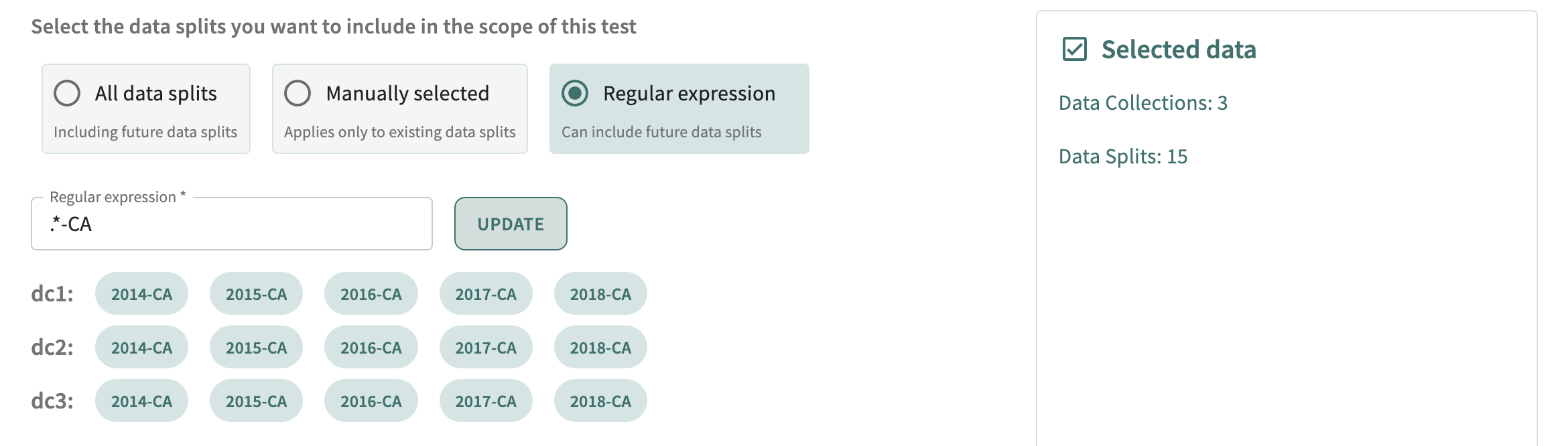

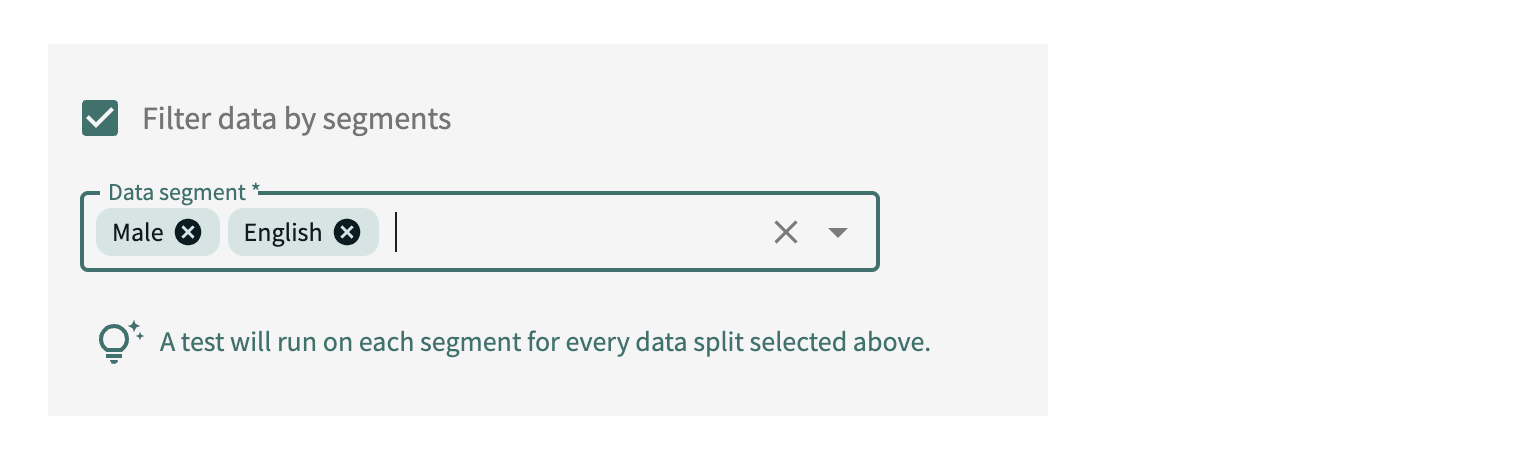

Finally, select Regular expression to run the test on all data splits, both existing and those defined later, with names matching the regular expression entered. Preview matching data splits by clicking UPDATE.
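
To get a feel for which names a pattern will pick up before entering it in the wizard, you can try it locally with Python's `re` module. The split names and the pattern below are made up for illustration, and the sketch assumes the pattern must match the entire split name; the web app's exact matching rules may differ, so confirm the result with UPDATE.

```python
import re


def matching_splits(pattern, split_names):
    """Return the split names that the pattern matches in full.

    Assumption: a split qualifies only if the whole name matches.
    """
    compiled = re.compile(pattern)
    return [name for name in split_names if compiled.fullmatch(name)]


# Hypothetical split names.
splits = ["train_2023", "test_2023_q1", "test_2023_q2", "validation", "oot_test"]

print(matching_splits(r"test_2023_q\d", splits))
# ['test_2023_q1', 'test_2023_q2']

# A split defined later is picked up as soon as its name matches.
splits.append("test_2023_q3")
print(matching_splits(r"test_2023_q\d", splits))
# ['test_2023_q1', 'test_2023_q2', 'test_2023_q3']
```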

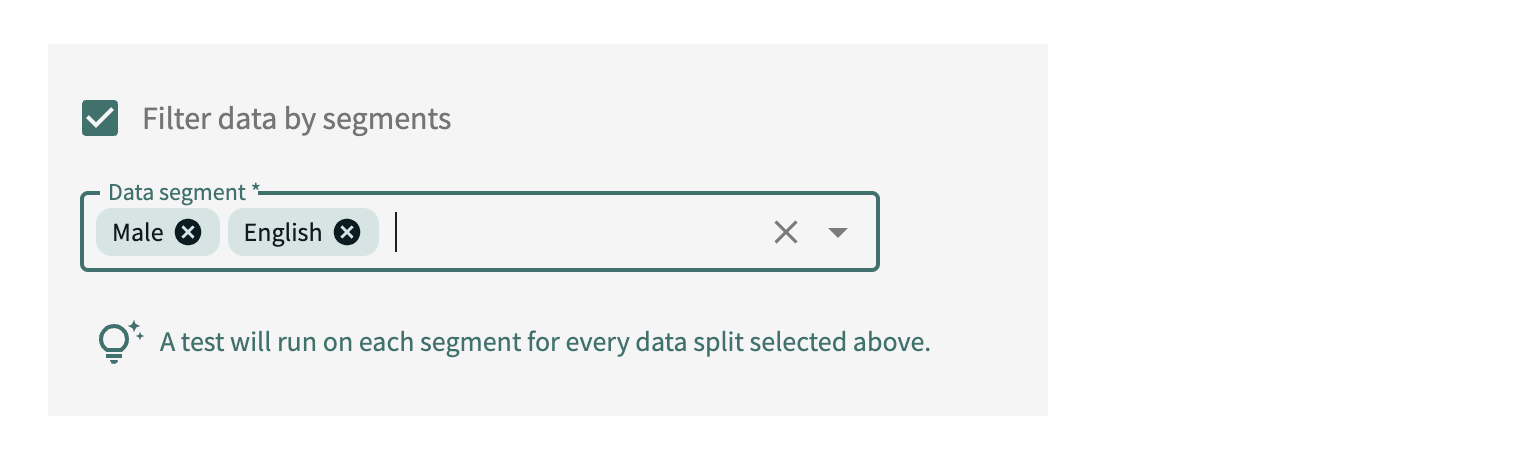

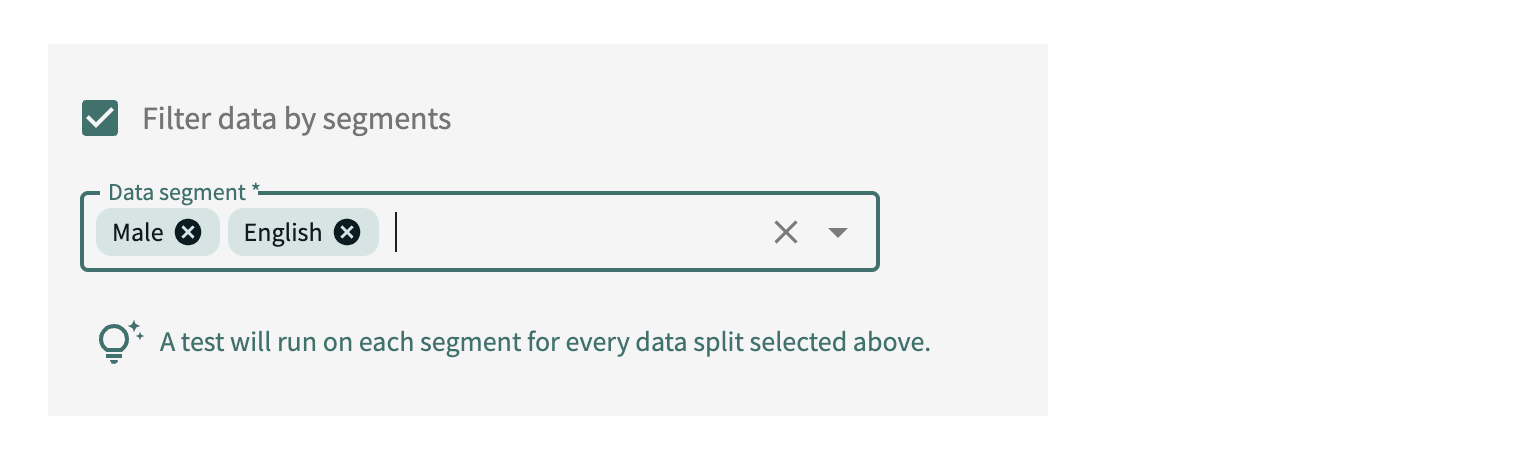

In this dialog, you can also select the segments on which to run the test. Simply check Filter data by segments, and select the segments.

Click SUBMIT to confirm these test parameters or click BACK to make changes.

Drift Tests

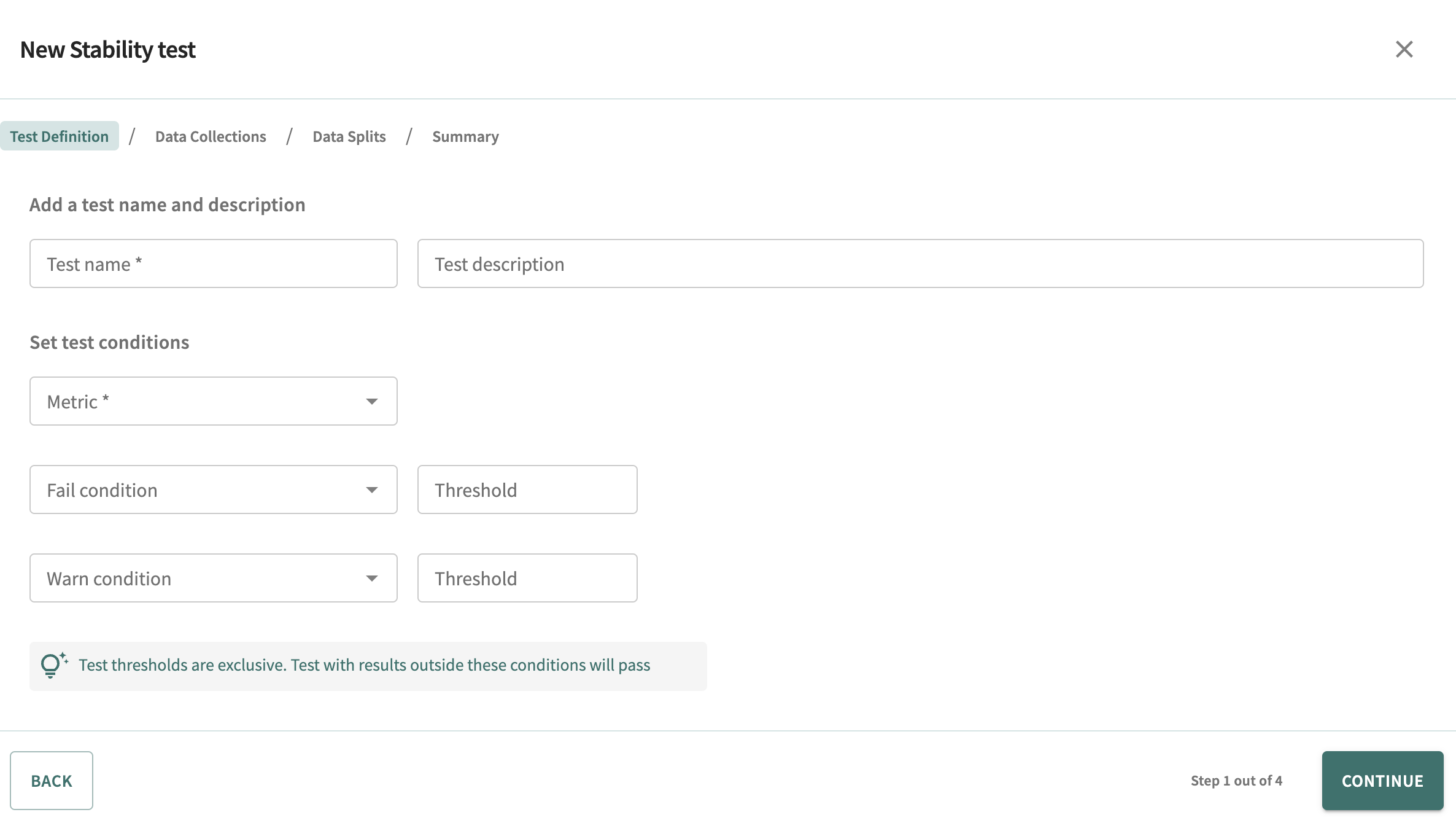

Upon selecting the Drift test option, the drift test creation wizard is displayed.

Enter the name of the test and an optional description, then select the drift metric used to measure the results.

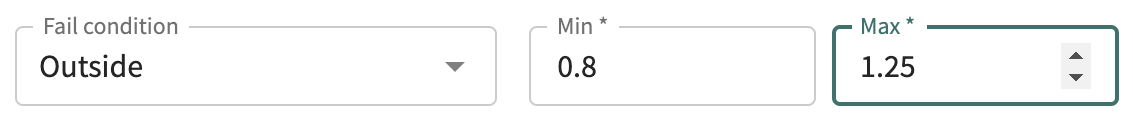

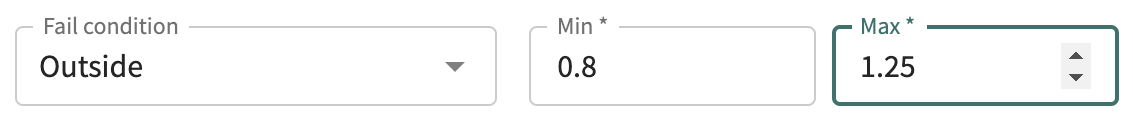

Define failure and/or warning conditions by selecting a condition, then adjusting the threshold as desired.
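
How a warning condition and a failure condition combine can be sketched as follows. This is a hypothetical illustration rather than the product's logic: it assumes both conditions are "greater than" comparisons on the drift metric, that a failure takes precedence over a warning, and the outcome labels are invented for the example.

```python
def evaluate_drift_test(drift_value, warn_threshold=None, fail_threshold=None):
    """Return a hypothetical outcome label for a measured drift value.

    Either threshold may be omitted, since a test can define a failure
    condition, a warning condition, or both.
    """
    if fail_threshold is not None and drift_value > fail_threshold:
        return "FAILED"
    if warn_threshold is not None and drift_value > warn_threshold:
        return "WARNING"
    return "PASSED"


print(evaluate_drift_test(0.05, warn_threshold=0.10, fail_threshold=0.25))  # PASSED
print(evaluate_drift_test(0.15, warn_threshold=0.10, fail_threshold=0.25))  # WARNING
print(evaluate_drift_test(0.30, warn_threshold=0.10, fail_threshold=0.25))  # FAILED
print(evaluate_drift_test(0.30, warn_threshold=0.10))                       # WARNING
```

Setting the warning threshold below the failure threshold gives you an early signal that drift is growing before the test fails outright.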

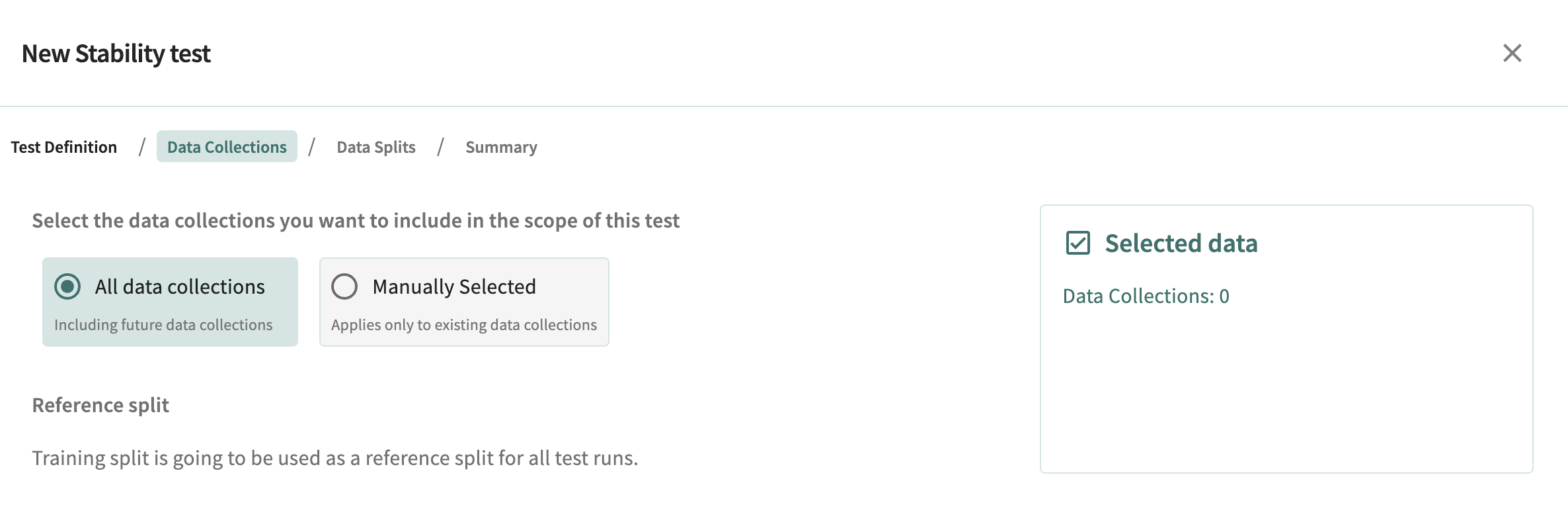

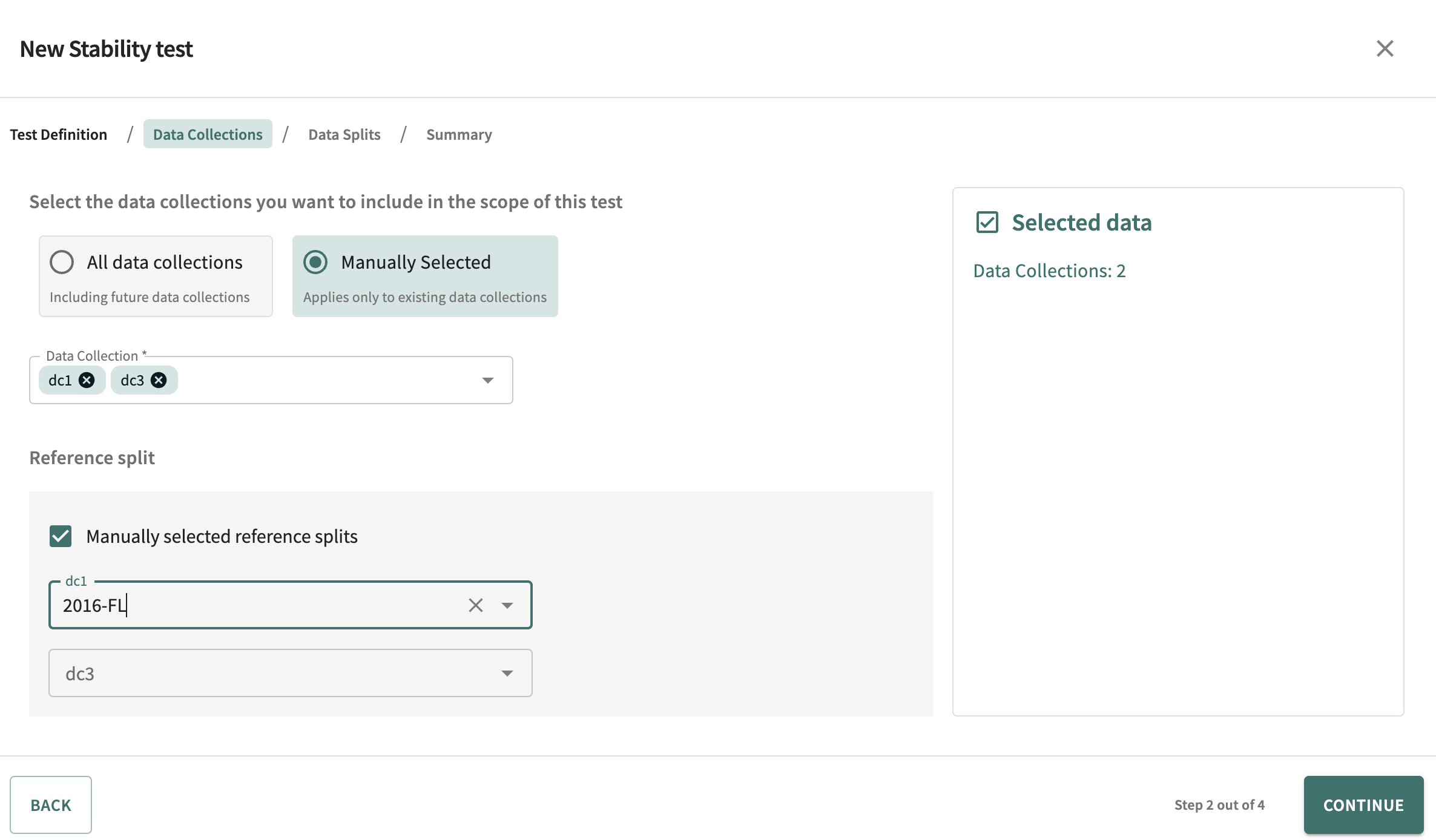

Click CONTINUE to select the data collection(s) on which the test will run and the reference splits for the selected data collection(s).

Select All data collections to run the test on all available data collections — those currently existing and those defined later — with respect to the splits/segments configured next. With this option, the reference split is automatically set to the respective training split for each data collection.

Choose Manually selected to select specific data collections for testing. With this option, you can define the reference split manually for each selected data collection.

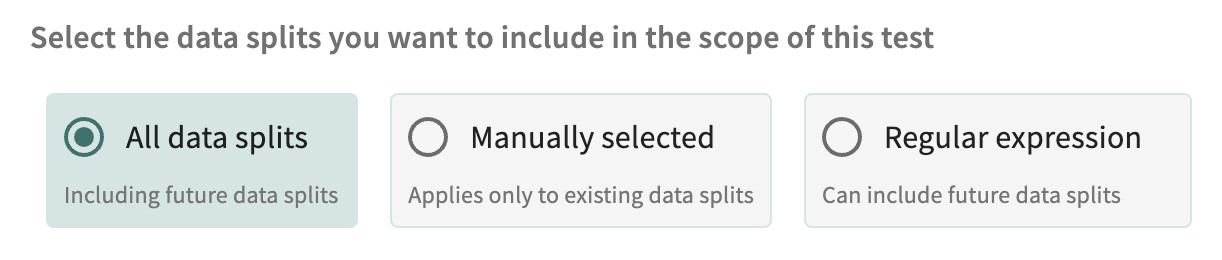

Click CONTINUE to select the test data split(s) and segment(s) with respect to previously defined data collections. Three options are available:

- All data splits

- Manual data splits (not available if you selected All data collections above)

- Data splits matching a regular expression.

Select All data splits to run the test on all data splits, both existing and subsequently defined. Choose Manually selected to limit testing to the data splits you pick.

Finally, select Regular expression to run the test on all data splits, both existing and those defined later, with names matching the regular expression entered. Preview matching data splits by clicking UPDATE.

You can also select the segments to run the test on. Simply check Filter data by segments, then select the segments.

Click SUBMIT to confirm these test parameters or click BACK to make changes.

Fairness Tests

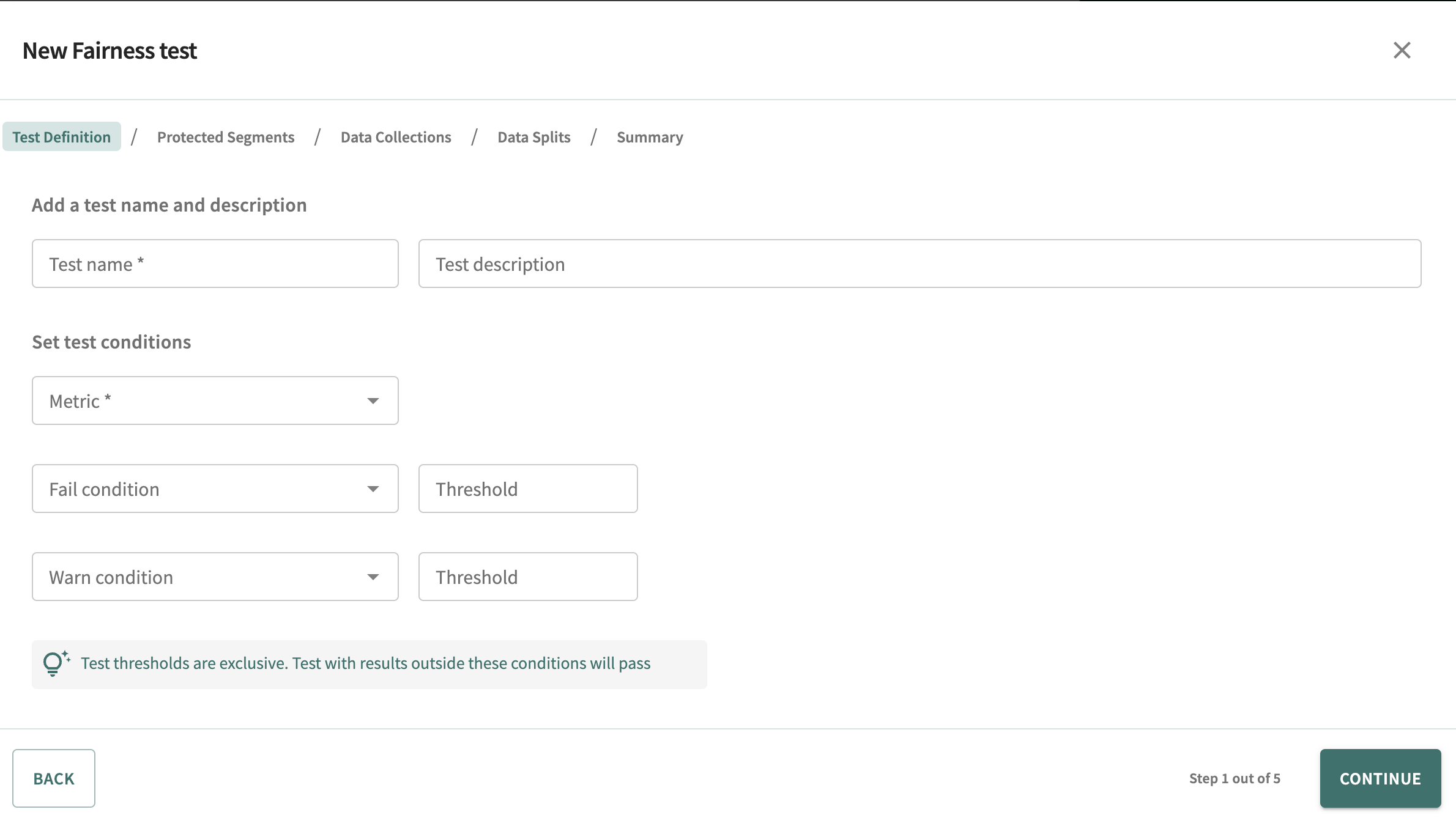

Upon selecting the Fairness test option, the fairness test creation wizard appears.

Enter the name of the test and an optional description, then select the fairness metric used to measure the results.

Define failure and/or warning conditions by selecting a condition, then adjusting the threshold as desired.

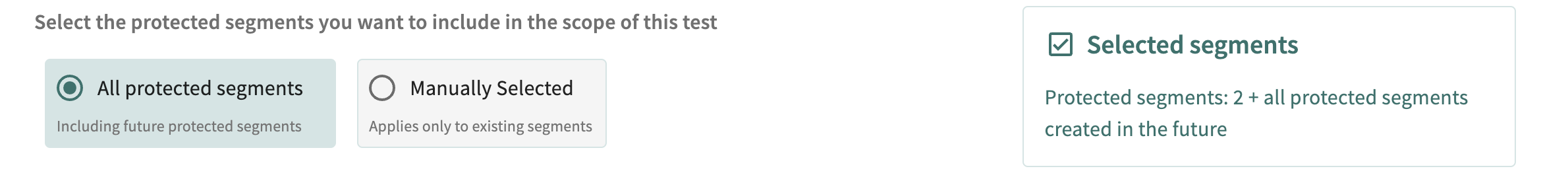

Click CONTINUE to select the protected segments for the test.

Select All protected segments to run the test on all available protected segments (those currently existing and those defined later) with respect to the data collections/splits configured next. Choose Manually selected to limit testing to the protected segments you pick.

Click CONTINUE to select the data collection(s) on which the test will run.

Select All data collections to run the test on all available data collections — those currently existing and those defined later — with respect to the splits/segments configured next. Choose Manually selected to select specific data collections for testing.

Click CONTINUE to select the test data split(s) and segment(s) with respect to previously defined data collections. Three options are available:

- All data splits

- Manual data splits (not available if you selected All data collections above)

- Data splits matching a regular expression.

Select All data splits to run the test on all data splits, both existing and subsequently defined. Choose Manually selected to limit testing to the data splits you pick.

Finally, select Regular expression to run the test on all data splits, both existing and those defined later, with names matching the regular expression entered. Preview matching data splits by clicking UPDATE.

Click SUBMIT to confirm these test parameters or click BACK to make changes.

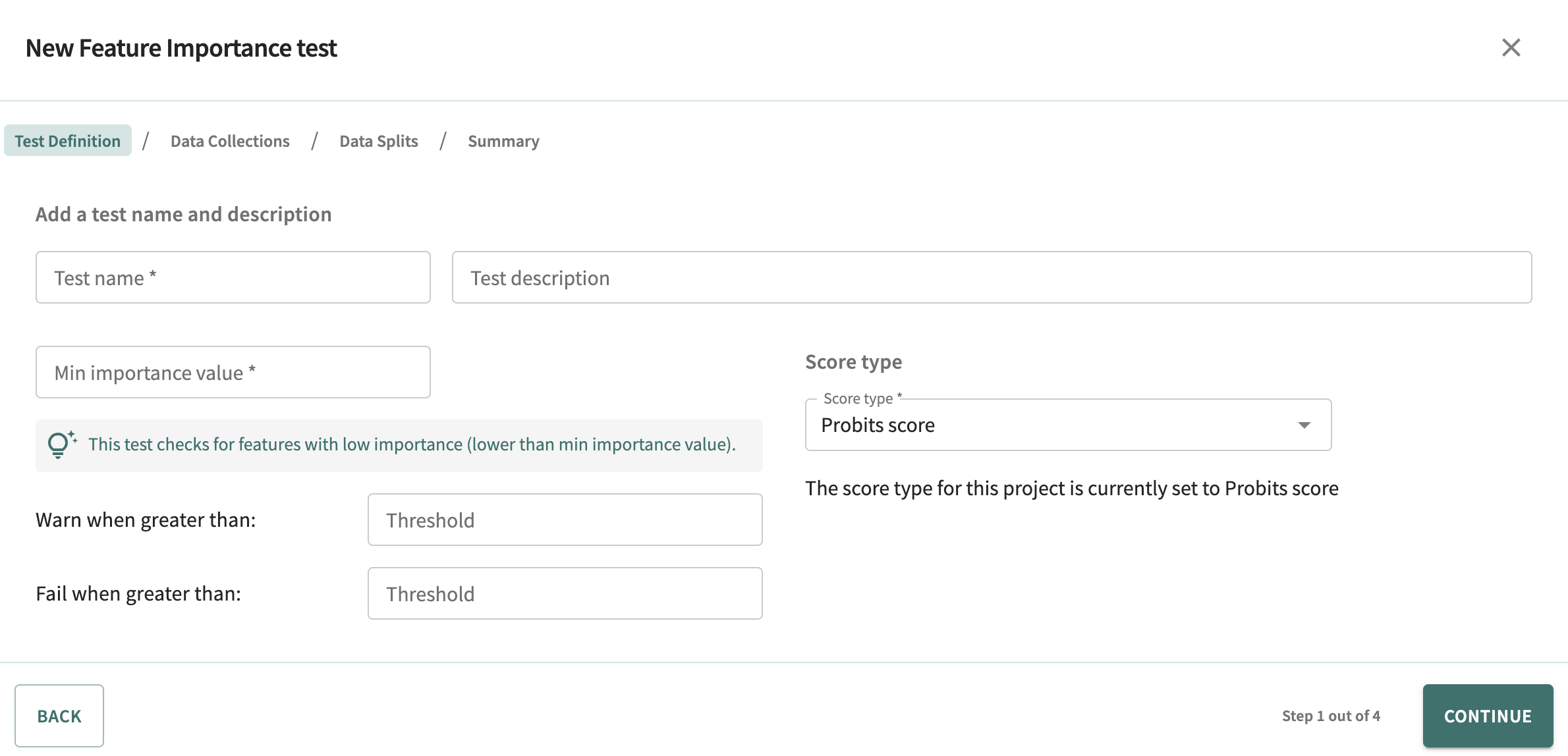

Feature Importance Tests

Upon selecting the Feature importance test option, the feature importance test creation wizard is displayed.

Enter the name of the test and an optional description, set the minimum importance value for features (between 0 and 1, exclusive), and set the score type (that is, the quantity of interest) for this test.

For feature importance tests, define failure and/or warning conditions by specifying a threshold on the number of unimportant features in the model, meaning features whose importance falls below the minimum value set above. The threshold must be an integer.
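
The check this test performs can be sketched as counting the features that fall below the minimum importance and comparing that count with an integer threshold. The sketch below is an assumption-laden illustration, not TruEra's implementation: the feature names and importance values are invented, importances are assumed to be normalized to the range 0 to 1, and the condition is assumed to be "more than N unimportant features".

```python
def count_unimportant_features(importances, min_importance):
    """Count features whose importance is strictly below the minimum."""
    return sum(1 for value in importances.values() if value < min_importance)


def evaluate_feature_importance_test(importances, min_importance,
                                     warn_threshold=None, fail_threshold=None):
    """Return a hypothetical outcome label based on the unimportant-feature count."""
    unimportant = count_unimportant_features(importances, min_importance)
    if fail_threshold is not None and unimportant > fail_threshold:
        return "FAILED"
    if warn_threshold is not None and unimportant > warn_threshold:
        return "WARNING"
    return "PASSED"


# Hypothetical normalized feature importances.
importances = {
    "age": 0.42,
    "income": 0.35,
    "tenure": 0.22,
    "zip_code": 0.004,
    "device": 0.006,
}

print(count_unimportant_features(importances, min_importance=0.01))  # 2
print(evaluate_feature_importance_test(
    importances, min_importance=0.01, warn_threshold=1, fail_threshold=3))  # WARNING
```

Because the condition is expressed as a count of features, the thresholds are whole numbers, which is why the wizard accepts only integers here.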

Click CONTINUE to select the data collection(s) on which the test will run.

Select All data collections to run the test on all available data collections — those currently existing and those defined later — with respect to the splits/segments configured next. Choose Manually selected to select specific data collections for testing.

Click CONTINUE to select the test data split(s) and segment(s) with respect to previously defined data collections. Three options are available:

- All data splits

- Manual data splits (not available if you selected All data collections above)

- Data splits matching a regular expression.

Select All data splits to run the test on all data splits, both existing and subsequently defined. Choose Manually selected to limit testing to the data splits you pick.

Finally, select Regular expression to run the test on all data splits, both existing and those defined later, with names matching the regular expression entered. Preview matching data splits by clicking UPDATE.

You can also select the segments to run the test on. Simply check Filter data by segments, and select the segments.

Click SUBMIT to confirm these test parameters or click BACK to make changes.

Viewing Your Model Tests

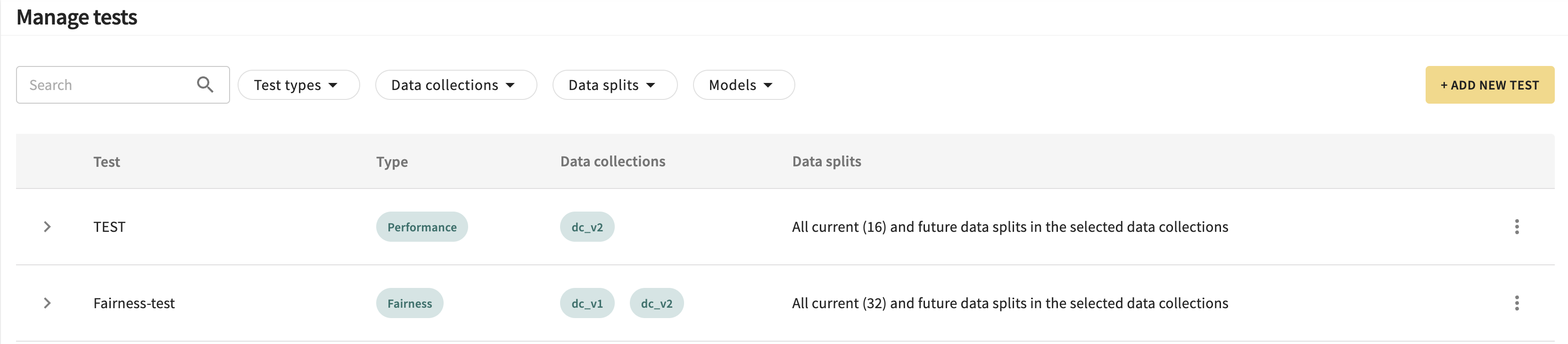

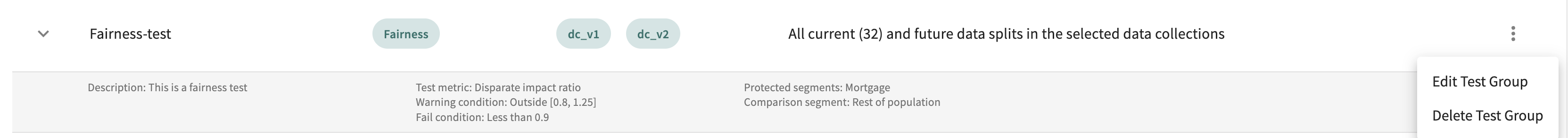

When one or more tests have been defined, the Test Harness page displays them in a table.

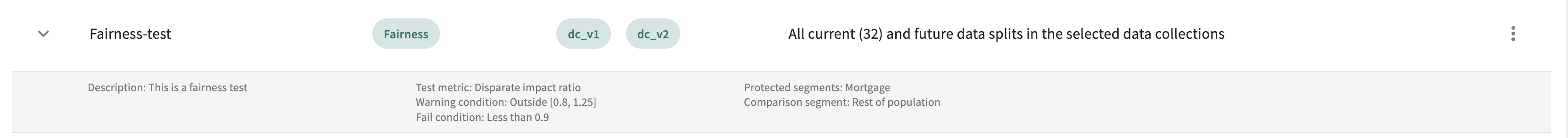

You can filter the list by test name and type, as well as by data collection, split, and model. Expand any row to view the metadata of the test.

Open the menu at the end of a row to edit or delete a test.

Editing Your Model Tests

Selecting Edit test group from the menu at the end of a row opens the wizard corresponding to the type of test. Complete the flow and click SUBMIT to update the test.
