Features

Called the "Features" page up to release 1.42.

Data scientists like to organize data into tables, where each row is an instance (also called a record, observation, or trial) representing a single data point, i.e., one unit of the person or thing being measured. Each column is a feature or variable (a characteristic) of the row entry: name, age, weight, education, temperature, viscosity, voltage, salinity ... you name it.
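To make the row/column vocabulary concrete, here is a minimal plain-Python sketch of such a table. The feature names and values are made up for illustration: each dictionary is one row (an observation), and each key is a feature.

```python
# Each dict is one row (one observation); each key is one feature (column).
# The feature names and values are hypothetical.
rows = [
    {"name": "Ana", "age": 34, "weight_kg": 61.0},
    {"name": "Ben", "age": 29, "weight_kg": 78.5},
    {"name": "Chen", "age": 41, "weight_kg": 70.2},
]

# The feature set is the set of column names shared by every row.
features = list(rows[0].keys())

# Pivot to a column-oriented view: one list of values per feature.
columns = {f: [row[f] for row in rows] for f in features}

print(features)        # ['name', 'age', 'weight_kg']
print(columns["age"])  # [34, 29, 41]
```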

But what does it mean?

It means that features constitute what your dataset is comparing, row by row.

It also means that the quality of the features in your dataset affects the quality of the insights gained when the dataset is used for machine learning. Business problems within the same industry do not necessarily require the same features. Not everything that can be measured is needed to answer the business problem posed, so it's important to understand the business goals and priorities of your modeling project before simply including every available feature (i.e., every measurable attribute). Some attributes are just not relevant to the analysis being undertaken. In other words, a fine line often separates "too much" from "not enough."

Hence, it's important to weigh the quality of your dataset's features and their relevance to the business problem at hand. Feature selection and feature engineering, though notoriously difficult and tedious, are therefore vital to the validity of your model's results. Done well, your continually optimized dataset should contain only those features that bear on the problem you're trying to solve. Including irrelevant features can exert undue influence on the model; omitting relevant ones can skew the results in an entirely different way.
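One simple way to act on this is a filter-style feature selection step. The sketch below is an illustration only, not TruEra's method: it keeps features whose absolute Pearson correlation with the target meets a threshold. The `select_features` helper, the 0.3 threshold, and the data are hypothetical, and correlation captures only linear relationships, so real feature selection usually combines several criteria with domain knowledge.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / (sx * sy)

def select_features(columns, target, threshold=0.3):
    """Keep features whose absolute correlation with the target meets the threshold."""
    return [name for name, values in columns.items()
            if abs(pearson(values, target)) >= threshold]

# Hypothetical data: 'hours' tracks the target, 'noise' does not, 'const' never varies.
columns = {
    "hours": [1, 2, 3, 4, 5],
    "noise": [2, 5, 1, 5, 2],
    "const": [7, 7, 7, 7, 7],
}
target = [10, 20, 30, 40, 50]
print(select_features(columns, target))  # ['hours']
```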

TruEra's Explainability diagnostics can help you find the "Goldilocks zone" for your model: not too much, not too little, but just right. They also help you determine whether the behavior the model has learned for a particular feature matches human or expert intuition.

Assessing Feature Behavior

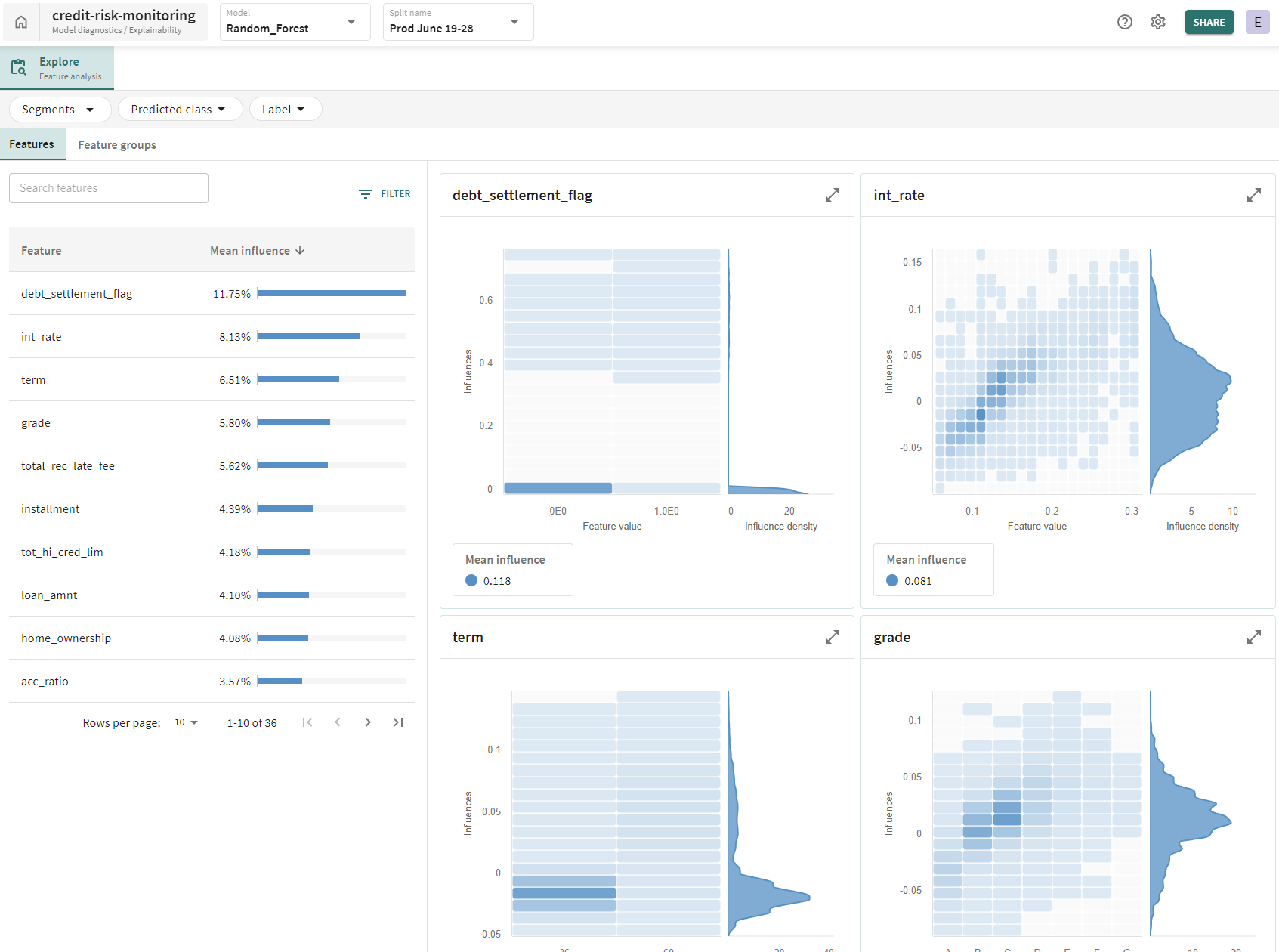

To begin, click Explainability under Diagnostics in the navigation panel on the left. This displays a page headed by two tabs: Features and Feature Groups.

Insight gained at this level of analysis concerns the influence of input variables on outputs. It measures the model's behavior in terms of input value changes, noise tolerance, data quality, internal structure, and more, as a means of uncovering abnormal or unexpected behavior.

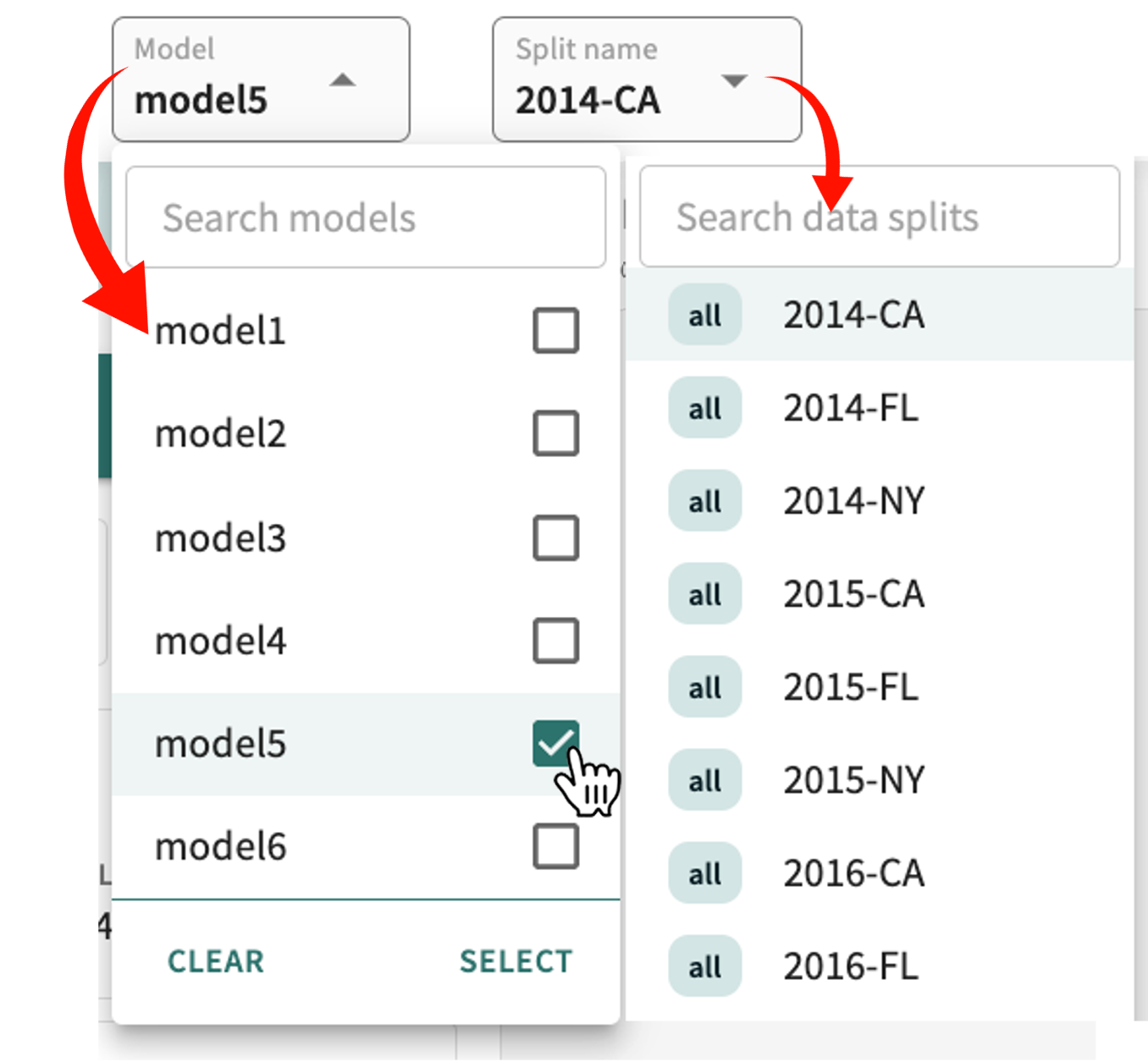

As pictured above, the left-side panel under the Features tab lists the current features for the selected model and split. (Remember, you can change the model and/or split at any time.) Click a feature to enlarge its graph.

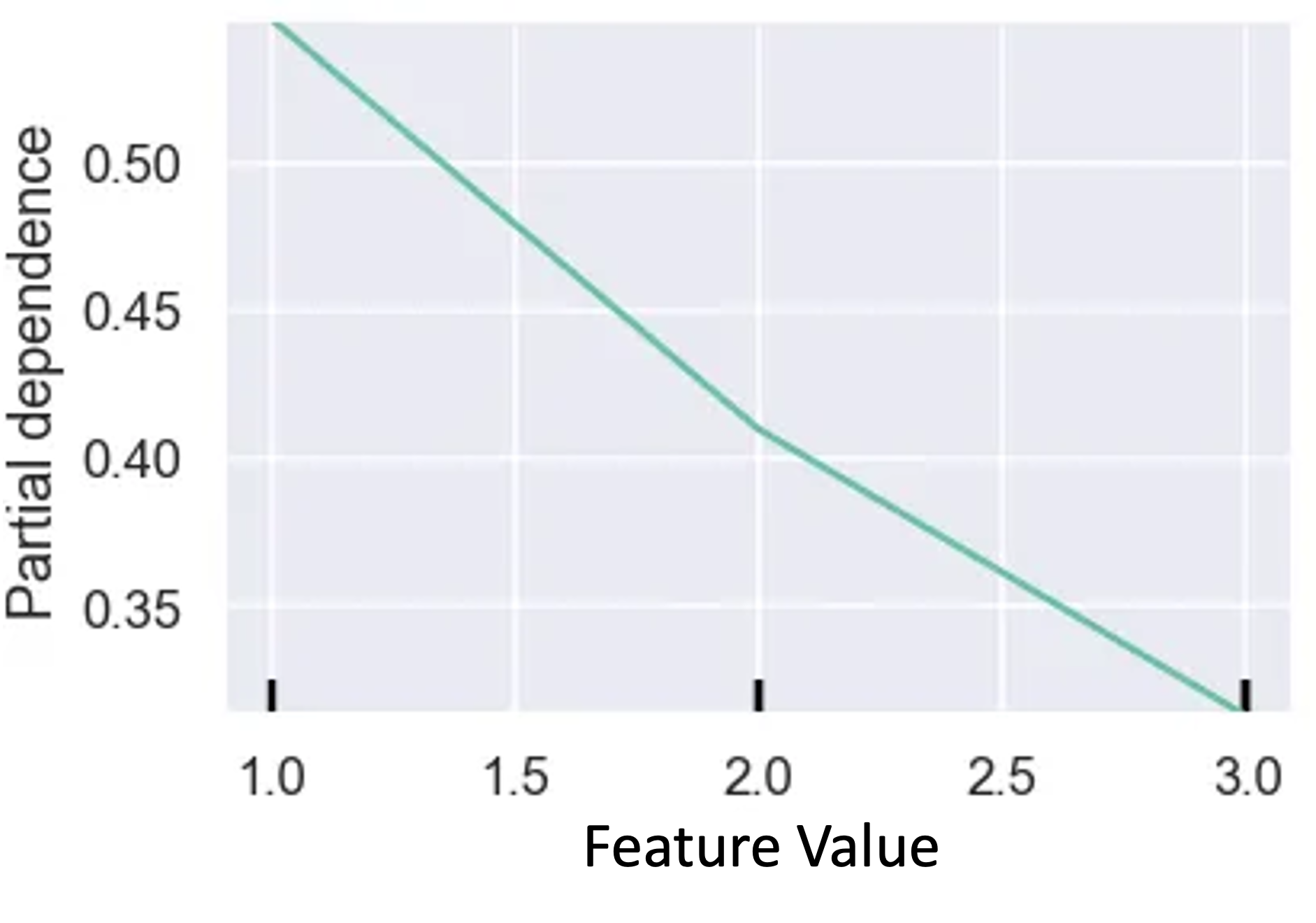

Influence Sensitivity Plots

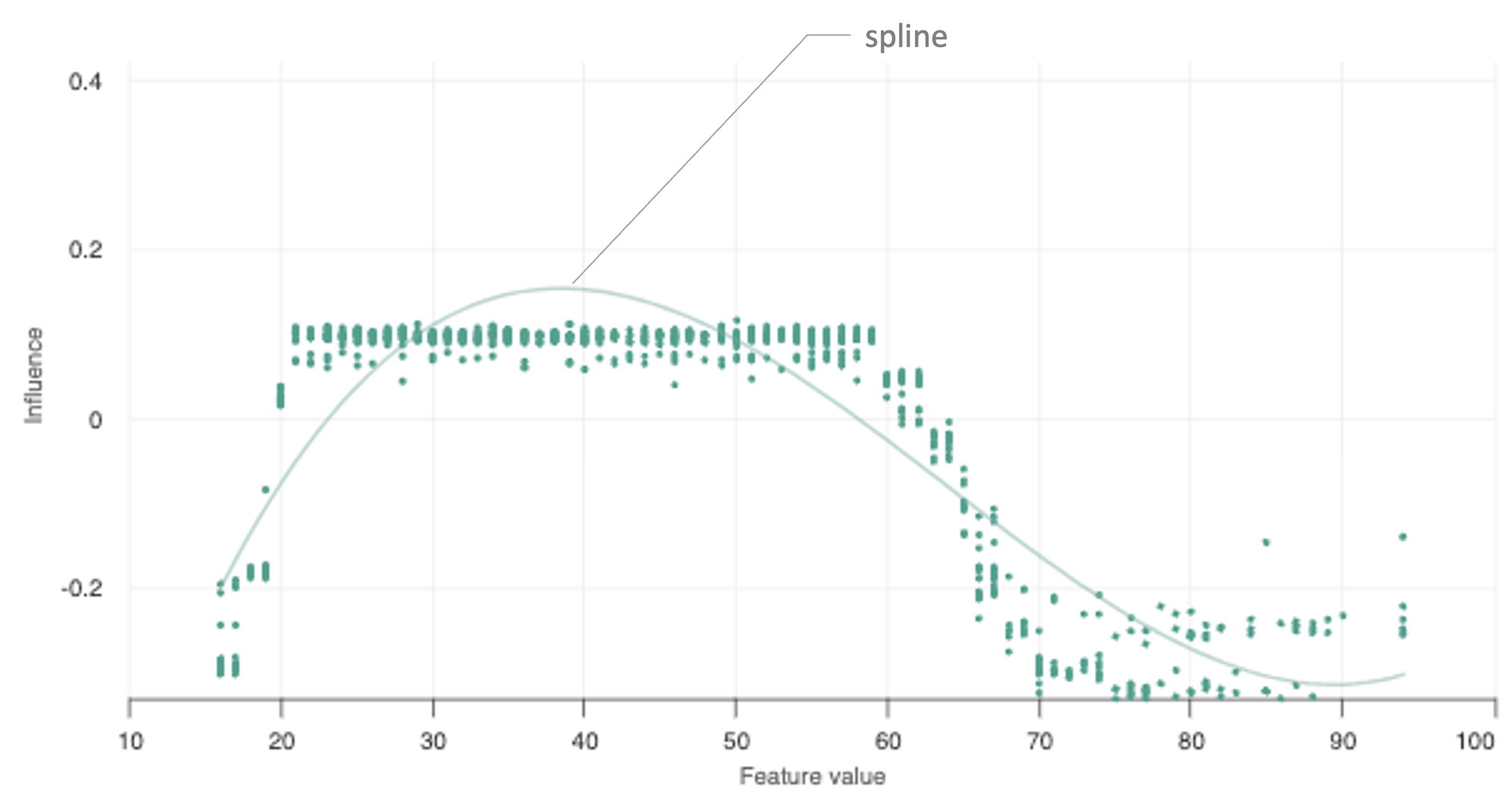

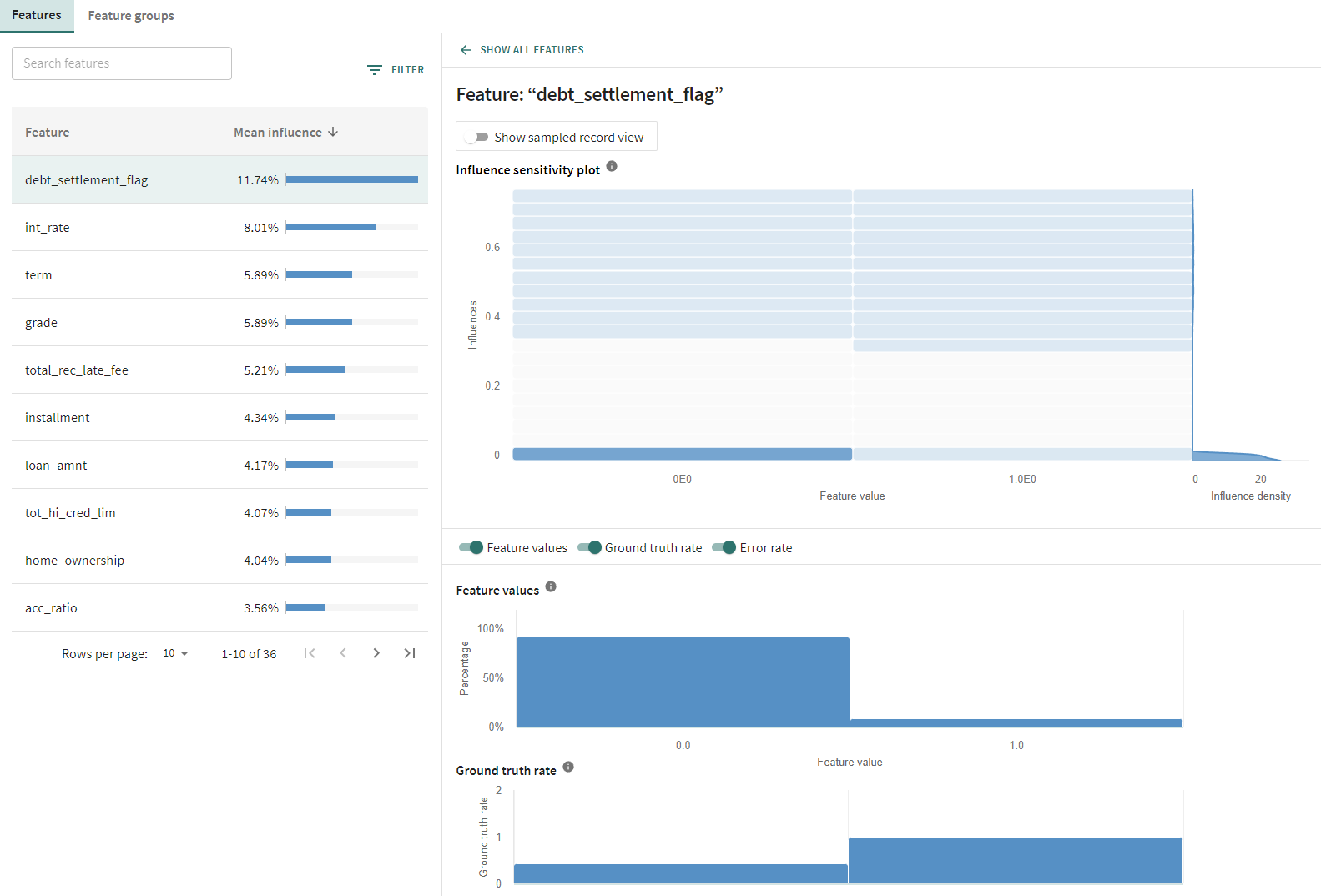

Here, the right-side panel shows greater detail for the selected feature in the form of an Influence Sensitivity Plot (ISP). An ISP shows the relationship between a feature's value and its contribution to the model output. The influence density (shown on the right) is added as a composite overlay to contextualize the ISP.
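To make "contribution to the model output" concrete, the sketch below computes ISP points for a toy linear model. This is an assumption-laden illustration, not how TruEra computes influences in general: for a linear model compared against a mean-valued baseline, a feature's contribution reduces to w_i * (x_i - mean_i), which matches the Shapley value for a linear model when features are treated as independent. The weights, records, and helper names are hypothetical.

```python
# Hypothetical linear model: score = bias + sum(w_i * x_i).
weights = {"age": 0.5, "income": 0.002}
bias = -10.0

records = [
    {"age": 25, "income": 30000},
    {"age": 40, "income": 52000},
    {"age": 55, "income": 41000},
]

def score(record):
    """Model output for one record."""
    return bias + sum(w * record[f] for f, w in weights.items())

# Baseline = feature means over the records (the comparison group).
means = {f: sum(r[f] for r in records) / len(records) for f in weights}
baseline_score = bias + sum(w * means[f] for f, w in weights.items())

def influence(record, feature):
    """Contribution of one feature to this record's score relative to the baseline."""
    return weights[feature] * (record[feature] - means[feature])

# ISP points for 'age': one (feature value, influence) pair per record.
isp_age = [(r["age"], influence(r, "age")) for r in records]
print(isp_age)  # [(25, -7.5), (40, 0.0), (55, 7.5)]
```

Each (value, influence) pair is one point on the ISP for `age`. Because the model is linear, each record's influences sum to its score minus `baseline_score`.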

You can also turn on Show sampled record view to see a point-wise influence visualization for a random sample of 1,000 to 10,000 records (configurable in the project settings) from the selected split.
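The sampled record view can be thought of as drawing a random subset of the split. The sketch below assumes uniform sampling without replacement and clamps the requested size to the 1,000 to 10,000 range mentioned above; `sample_records` and its clamping behavior are hypothetical, not a TruEra API.

```python
import random

def sample_records(records, sample_size, seed=None):
    """Draw a uniform random sample (without replacement) for a point-wise view.

    Hypothetical helper: sample_size is clamped to 1,000-10,000 and can
    never exceed the number of available records.
    """
    size = max(1000, min(sample_size, 10_000))
    size = min(size, len(records))
    rng = random.Random(seed)  # seeded generator for reproducible samples
    return rng.sample(records, size)

records = list(range(50_000))  # stand-in for the records in a split
sample = sample_records(records, 2_500, seed=0)
print(len(sample))  # 2500
```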

Within an ISP, you can also enable and examine the following:

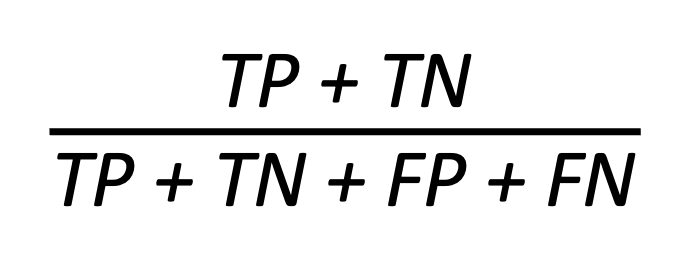

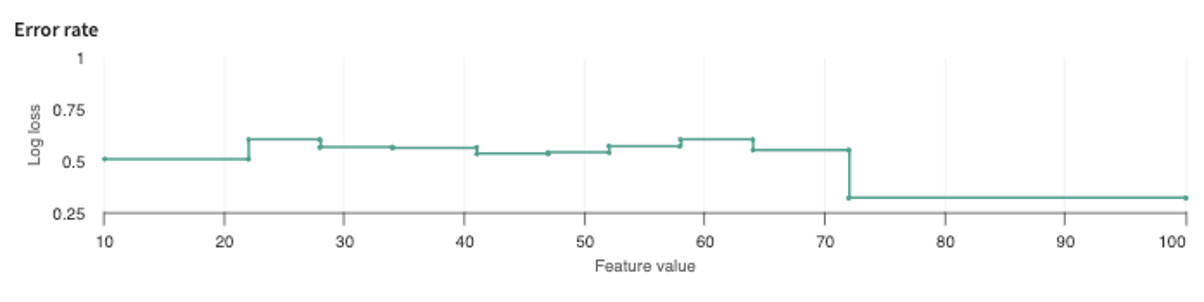

- Error rate – shows the model's error rate for bucketized ranges of the feature's value. This helps contextualize the ISP: by checking regions of the ISP against the error rate graph, you can see whether the error rate changes as the influence changes, which can help you judge whether this feature is aiding or hindering the model's accuracy.

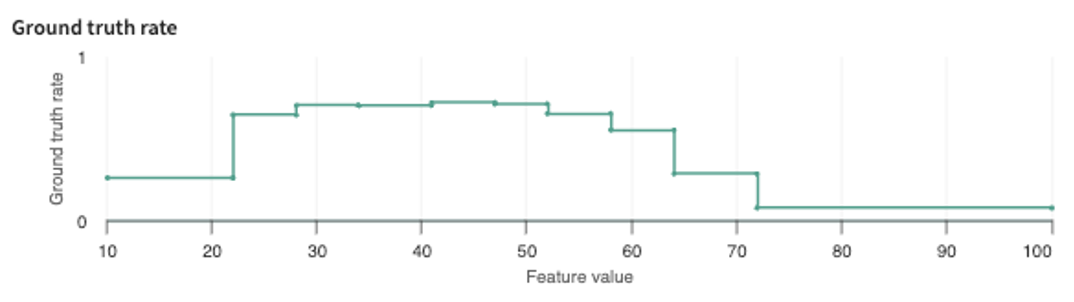

- Ground truth rate – shows the ground truth rate of the selected data split for bucketized ranges of the feature's value. This helps contextualize the ISP by sanity-checking feature behavior against the ground truth labels in each region, so you can see whether the influence relationship correlates with the ground truth in the data. For example, if the influence toward a particular class increases as the feature value increases, it would be reasonable (though not guaranteed) to expect the ground truth rate for that class to increase as well.
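Both overlays boil down to grouping records by feature value and computing a rate per bucket. The sketch below assumes binary labels and equal-width buckets; how TruEra actually chooses bucket boundaries is not stated here, and `rates_by_bucket` is a hypothetical helper. Each bucket yields the model's error rate and the fraction of records whose ground truth label is 1.

```python
def bucketize(values, n_buckets):
    """Assign each value to one of n equal-width buckets over [min, max]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_buckets or 1.0  # avoid zero width when all values are equal
    return [min(int((v - lo) / width), n_buckets - 1) for v in values]

def rates_by_bucket(values, y_true, y_pred, n_buckets=4):
    """Per bucket: (error rate, ground-truth positive rate) for binary labels."""
    buckets = bucketize(values, n_buckets)
    out = {}
    for b in sorted(set(buckets)):
        idx = [i for i, bb in enumerate(buckets) if bb == b]
        errors = sum(y_true[i] != y_pred[i] for i in idx)
        positives = sum(y_true[i] for i in idx)
        out[b] = (errors / len(idx), positives / len(idx))
    return out

# Hypothetical feature values, ground truth labels, and model predictions.
values = [1, 2, 3, 4, 5, 6, 7, 8]
y_true = [0, 0, 0, 1, 1, 1, 1, 1]
y_pred = [0, 1, 0, 0, 1, 1, 1, 1]
result = rates_by_bucket(values, y_true, y_pred)
print(result)  # {0: (0.5, 0.0), 1: (0.5, 0.5), 2: (0.0, 1.0), 3: (0.0, 1.0)}
```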

In the sampled record view, you can additionally examine:

- Best-fit spline – shows a smooth trend line (a piecewise polynomial) fit to the ISP to tease out a clear relationship between a feature's value and its contribution to the model score.

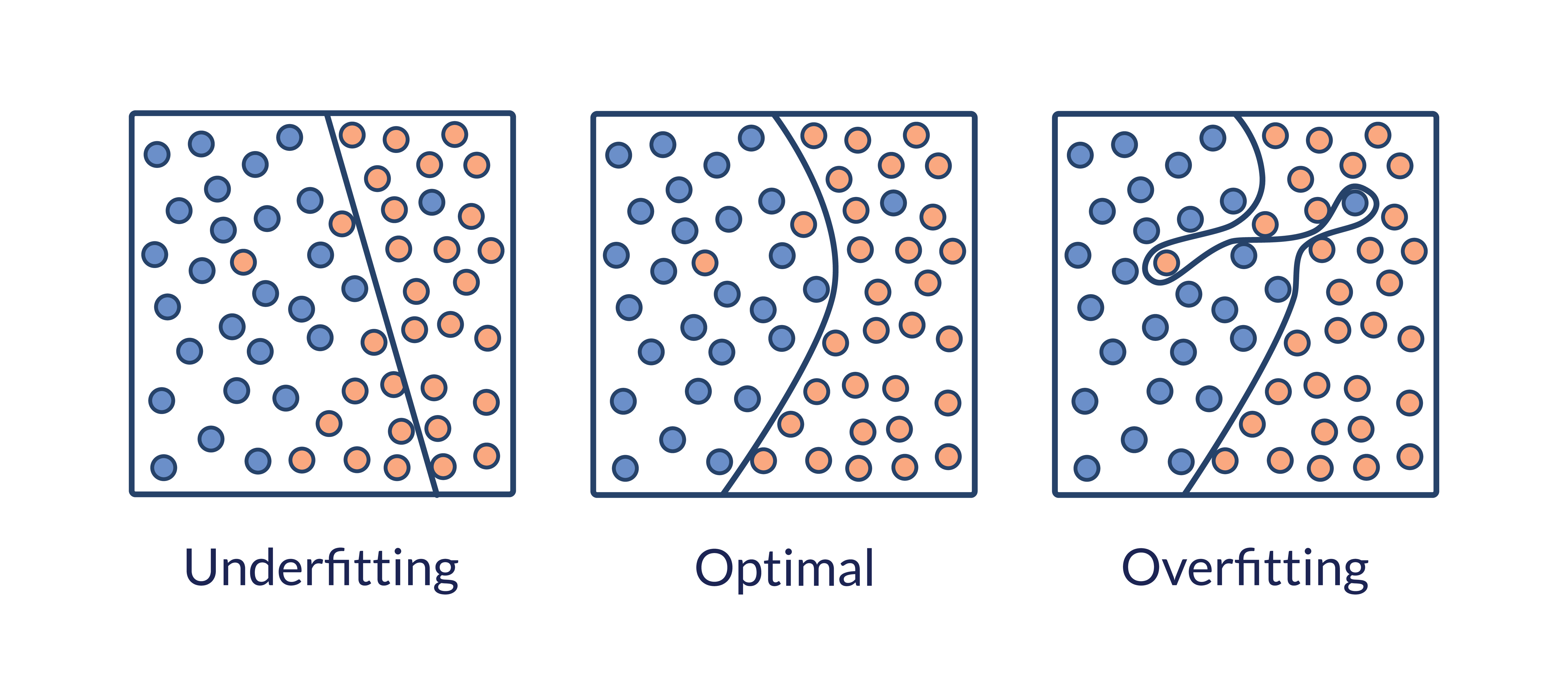

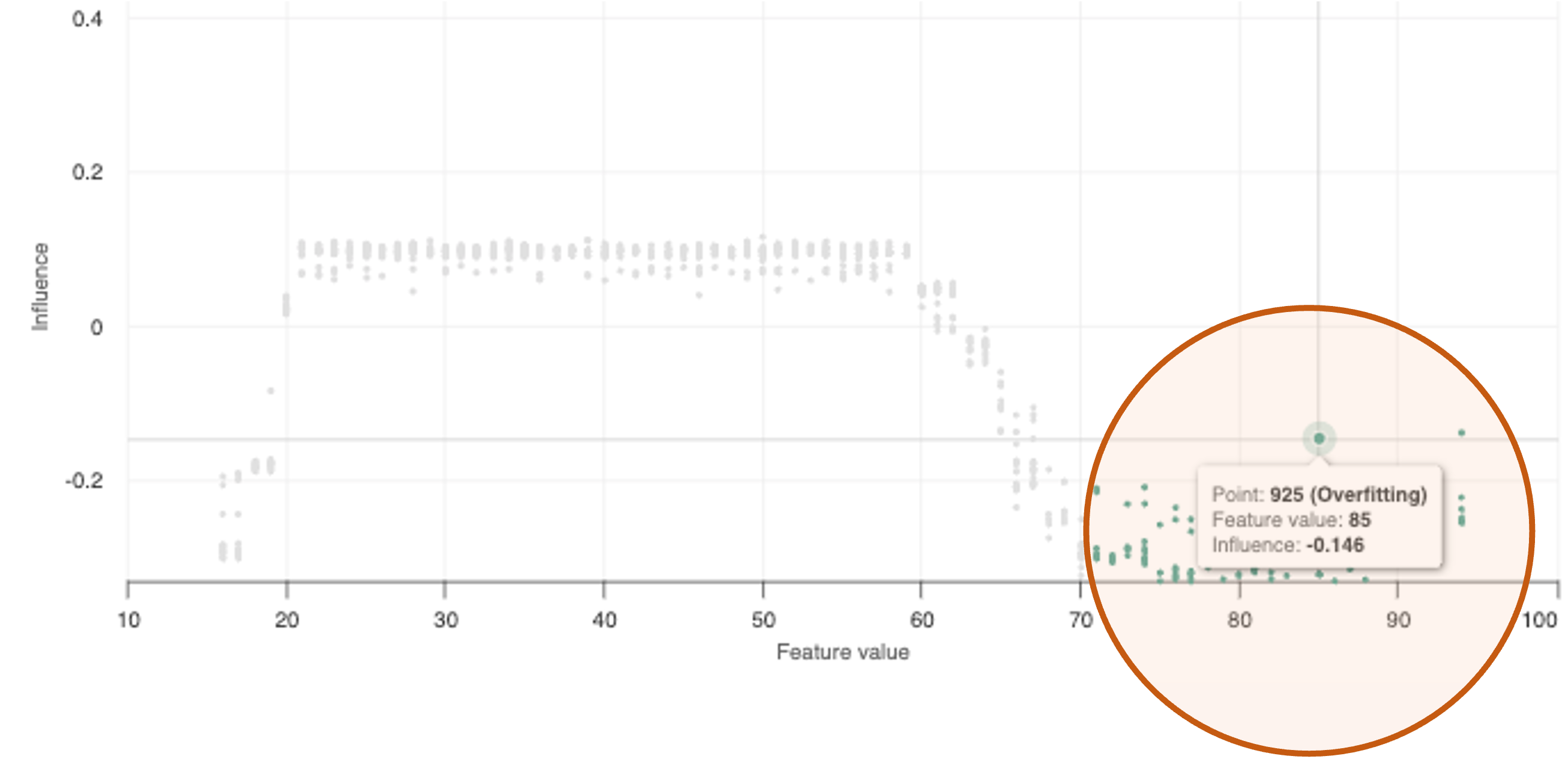

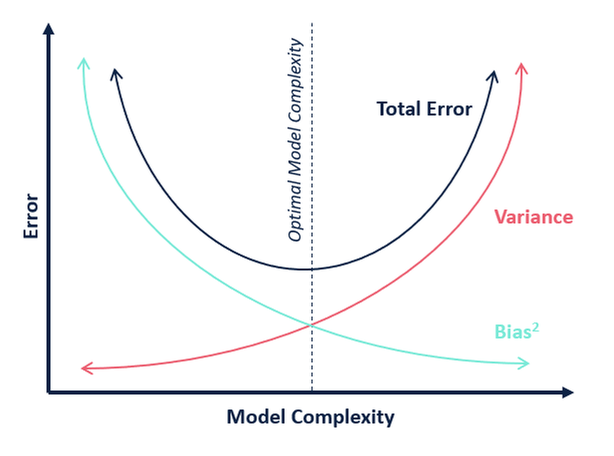

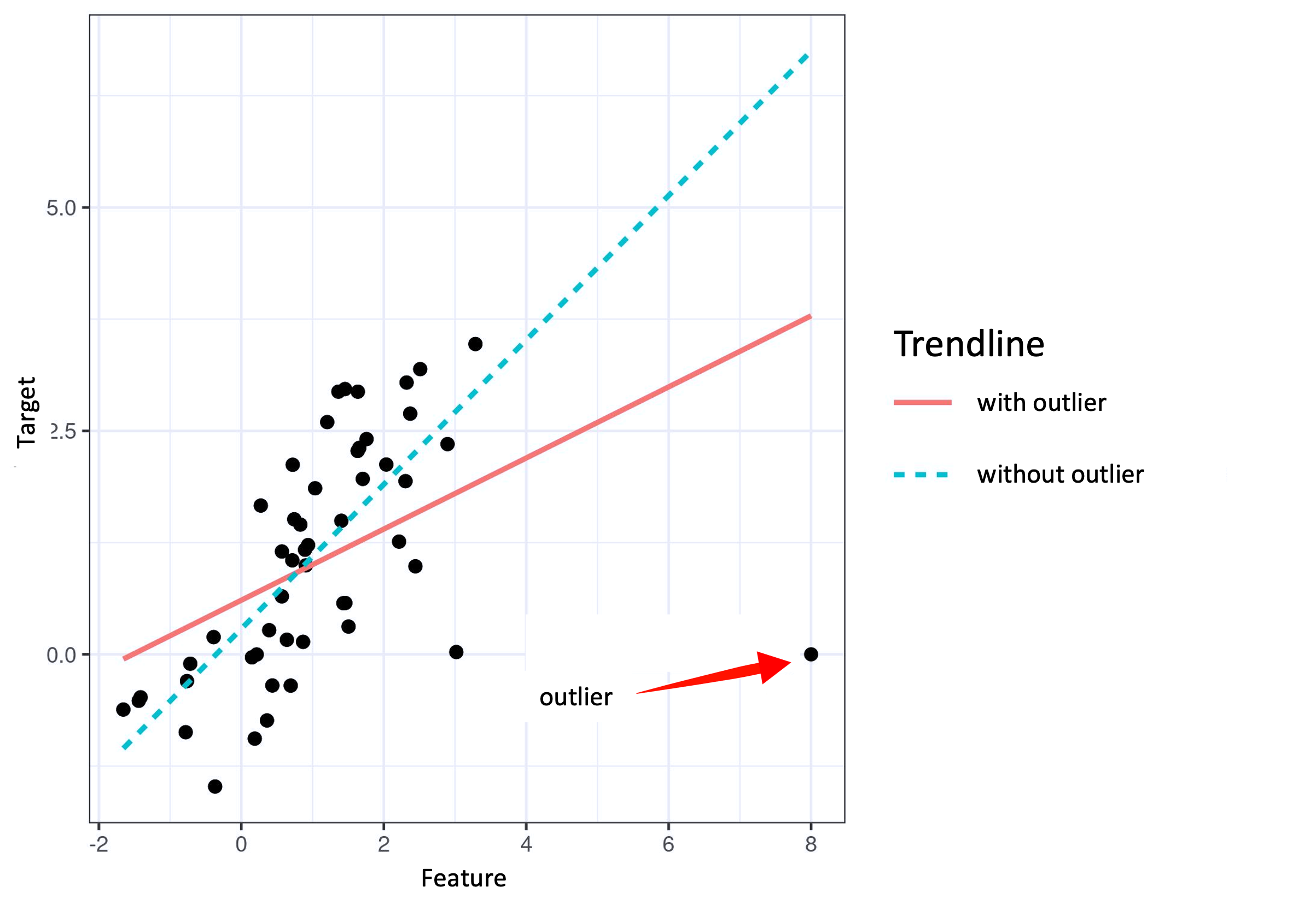

- Overfitting records – identifies influential points of the ISP that occur in low-density regions. Points that drive model scores despite occurring in low-density regions can indicate overfitting.
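A best-fit spline is a least-squares fit of a piecewise polynomial to the ISP points. The sketch below fits a regression spline using a truncated power basis with hand-picked knots and solves the normal equations directly; the helper names, knot placement, and degree are illustrative assumptions, and TruEra's own fitting procedure is not described here. For real work, a library routine such as SciPy's `UnivariateSpline` is numerically sturdier than normal equations.

```python
def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def basis(x, knots, degree):
    """Truncated power basis: 1, x, ..., x^d, then (x - k)_+^d for each knot."""
    return [x ** p for p in range(degree + 1)] + [max(x - k, 0.0) ** degree for k in knots]

def fit_spline(xs, ys, knots, degree=3):
    """Least-squares regression spline; returns a function that evaluates the fit."""
    rows = [basis(x, knots, degree) for x in xs]
    p = len(rows[0])
    # Normal equations: (B^T B) beta = B^T y
    btb = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    bty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(p)]
    beta = solve(btb, bty)
    return lambda x: sum(c * v for c, v in zip(beta, basis(x, knots, degree)))

# Hypothetical ISP points whose trend changes slope at x = 5.
xs = [float(i) for i in range(11)]
ys = [x + 2 * max(x - 5, 0.0) for x in xs]
trend = fit_spline(xs, ys, knots=[5.0], degree=1)
print(round(trend(7.0), 6))  # 11.0
```

With `degree=1` and one knot at 5, the fit recovers a line that changes slope at 5; the default `degree=3` gives a cubic spline.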

How is overfitting calculated?

The overfitting diagnostic identifies influential points that occur in low-density (sparse) regions of the data. A data point is classified as overfitting when its feature value falls in a region containing less than 3% of the data population and its influence is at or above the 95th percentile. This indicates that the point's feature value is driving the model's prediction even though it lies in a low-density region of the data. If the model overfits on such low-density regions, it can fail to generalize, leading to poor test and production performance.
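The rule above can be sketched as follows. Two details are assumptions because the text does not specify them: "regions" are approximated by equal-width bins over the feature's range (20 bins here), and the 95th percentile is taken over absolute influence values. `overfitting_records` is a hypothetical helper that returns the indices of flagged points.

```python
def percentile(values, q):
    """Linear-interpolation percentile (q in [0, 100]) of a list of numbers."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)

def overfitting_records(values, influences, n_bins=20, density_cutoff=0.03, pct=95):
    """Indices of points in sparse bins whose |influence| is at or above the pct-th percentile."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0
    bins = [min(int((v - lo) / width), n_bins - 1) for v in values]
    counts = {}
    for b in bins:
        counts[b] = counts.get(b, 0) + 1
    n = len(values)
    threshold = percentile([abs(i) for i in influences], pct)
    return [i for i in range(n)
            if counts[bins[i]] / n < density_cutoff  # low-density region
            and abs(influences[i]) >= threshold]     # highly influential

# Hypothetical data: 98 dense points, one sparse low-influence point, one sparse high-influence point.
values = [i / 10 for i in range(98)] + [60.0, 100.0]
influences = [0.1] * 98 + [0.05, 2.0]
print(overfitting_records(values, influences))  # [99]
```

The dense points are never flagged, and the isolated point at 60.0 is skipped because its influence is small; only the isolated, highly influential point at 100.0 is flagged.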

Click SHOW ALL FEATURES to return to the ISPs for all features.

Feature Groups

Feature groups are sets of individual features that are closely related or capture similar information (e.g., "demographic features"). When feature groups are ingested with the model (optional), they are displayed by group importance under the Feature Groups tab. If your model has features that are closely related or otherwise share characteristics, influences on the final model outcome are then scored for each feature group rather than for each individual feature. This is especially valuable when the selected split includes a large number of features.
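As a rough illustration of group-level scoring, the sketch below sums per-feature influences within each group and then ranks groups by mean absolute group influence. Summing is an assumption: it preserves the total for additive attributions, but computing a group's influence directly (treating the group as a single input) can give different values, and this page does not say which approach TruEra uses. The feature names, groups, and influence values are hypothetical.

```python
# Hypothetical per-record influences for individual features.
influences = [
    {"age": 0.20, "gender": -0.05, "income": 0.50, "debt": -0.30},
    {"age": -0.10, "gender": 0.05, "income": 0.10, "debt": -0.20},
]
groups = {"demographic": ["age", "gender"], "financial": ["income", "debt"]}

def group_influences(record_influences, groups):
    """Sum each group's member-feature influences for one record."""
    return {g: sum(record_influences[f] for f in members) for g, members in groups.items()}

per_record = [group_influences(r, groups) for r in influences]

# Rank groups by mean absolute group influence across records.
importance = {g: sum(abs(r[g]) for r in per_record) / len(per_record) for g in groups}
ranking = sorted(importance, key=importance.get, reverse=True)
print(ranking)  # ['financial', 'demographic']
```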

Filtering by Segments¶

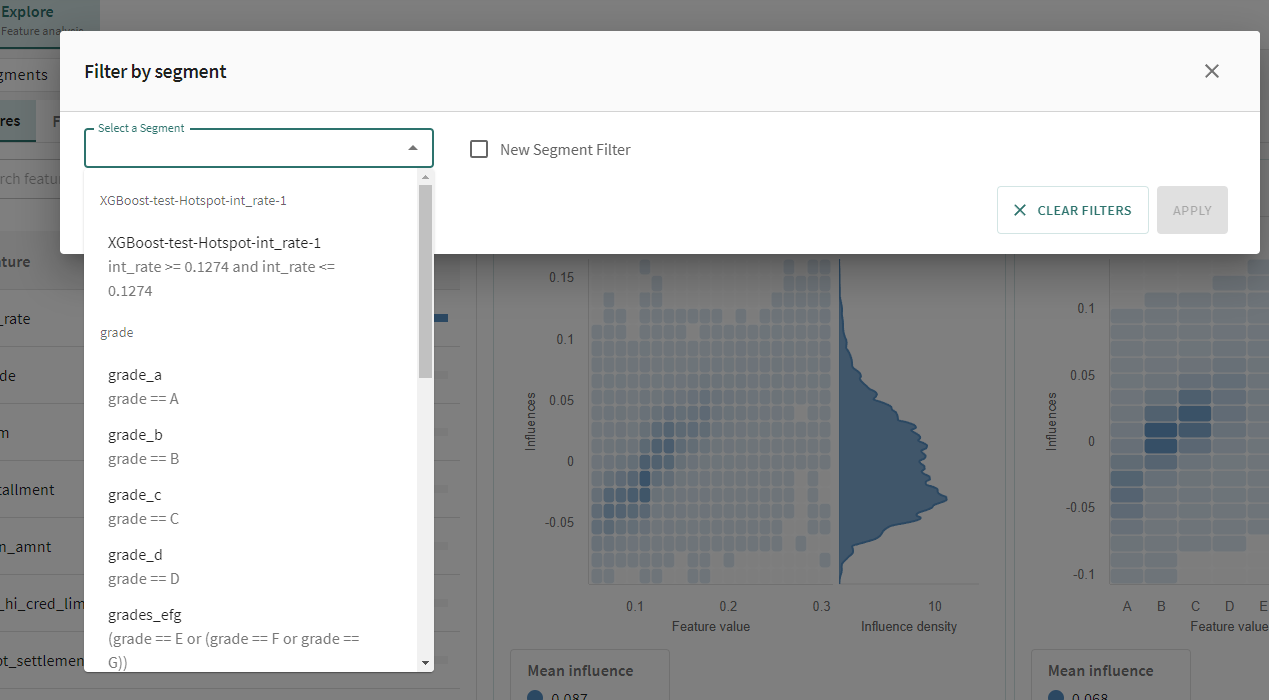

Above the feature diagnostic tabs is a drop-down control called Segments.

Assuming you've already defined segments for your model, click Segments. Here, you'll find your defined Segment groups for the selected model. To reset your filtering criteria at any time, click Clear Filters.

Otherwise, click on New Segment Filter and define a segment.
