Tests Using TruEra's Python SDK¶

Like the Web App, the SDK supports four types of model tests: performance tests, fairness tests, stability tests, and feature importance tests.

Let's take a closer look at each type in turn.

Performance Tests¶

A performance test computes the correctness of model predictions on a set of data, evaluating the model against user-defined numerical thresholds, which can serve as either a warning or the failure condition of the test. A performance test consists of three parts:

- A set of data points, either a full data split or a segment.

- A performance metric (see performance metrics)

- An optional failure and/or warning condition for the test.

Defining Performance Tests¶

A performance test can be defined using the add_performance_test function in the Tester class (you can access an instance of this class under tru.tester). Here are a few examples:

# Performance test on multiple data splits with a single value threshold

tru.tester.add_performance_test(

test_name="Accuracy Test",

data_split_names=["split1_name", "split2_name"],

metric="CLASSIFICATION_ACCURACY",

warn_if_less_than=0.85,

fail_if_less_than=0.82

)

# Alternatively, we can specify data split names using a regex

tru.tester.add_performance_test(

test_name="Accuracy Test",

data_split_name_regex=".*-California", # this test will be run on all data splits where the name ends with "-California"

all_data_collections=True, # this test will be applicable to all data collections

metric="CLASSIFICATION_ACCURACY",

warn_if_less_than=0.85,

fail_if_less_than=0.82

)
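The regex example above selects splits by name. As a rough illustration only, the sketch below shows which names a pattern such as ".*-California" would select if the whole split name must match the pattern, which is what the inline comment implies. The helper name matching_splits and the sample split names are hypothetical, and the SDK's actual matching rules may differ.

```python
import re
from typing import List


def matching_splits(split_names: List[str], pattern: str) -> List[str]:
    """Return the split names that match `pattern` in full.

    Assumption: the whole name must match (re.fullmatch), so ".*-California"
    selects names that end with "-California".
    """
    compiled = re.compile(pattern)
    return [name for name in split_names if compiled.fullmatch(name)]


# Hypothetical split names, for illustration only.
splits = ["2021-California", "2022-California", "2022-Texas", "California-archive"]
selected = matching_splits(splits, ".*-California")
print(selected)
```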

# Performance test using a segment with a single value threshold

tru.tester.add_performance_test(

test_name="Accuracy Test",

data_split_names=["split1_name", "split2_name"],

segment_group_name="segment_group_name",

segment_name="segment_name",

metric="FALSE_POSITIVE_RATE",

warn_if_greater_than=0.05,

fail_if_greater_than=0.1

)

# Performance test with a range threshold

tru.tester.add_performance_test(

test_name="Accuracy Test",

data_split_names=["split1_name", "split2_name"],

metric="FALSE_NEGATIVE_RATE",

warn_if_outside=(0.05, 0.1),

fail_if_outside=(0.02, 0.15)

)

The threshold of a performance test can be edited by calling the same function with the flag overwrite=True.
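To make the threshold arguments used above concrete, here is a minimal, self-contained sketch of how a metric value could be mapped to a test outcome. It is not TruEra's implementation: the function name evaluate_threshold, the outcome labels, and the choice to treat range boundaries as inside the range are all assumptions made for illustration.

```python
from typing import Optional, Tuple

Range = Tuple[float, float]


def evaluate_threshold(
    value: float,
    warn_if_less_than: Optional[float] = None,
    fail_if_less_than: Optional[float] = None,
    warn_if_greater_than: Optional[float] = None,
    fail_if_greater_than: Optional[float] = None,
    warn_if_outside: Optional[Range] = None,
    fail_if_outside: Optional[Range] = None,
) -> str:
    """Return "FAILED", "WARNING", "PASSED", or "UNDEFINED" (hypothetical labels)."""

    def violated(less: Optional[float], greater: Optional[float], outside: Optional[Range]) -> bool:
        if less is not None and value < less:
            return True
        if greater is not None and value > greater:
            return True
        # Assumption: a value exactly on a range boundary counts as inside.
        if outside is not None and not (outside[0] <= value <= outside[1]):
            return True
        return False

    thresholds = (
        warn_if_less_than, fail_if_less_than,
        warn_if_greater_than, fail_if_greater_than,
        warn_if_outside, fail_if_outside,
    )
    if all(t is None for t in thresholds):
        return "UNDEFINED"  # no warning or failure condition was given
    # The failure condition is checked first so it takes precedence over the warning.
    if violated(fail_if_less_than, fail_if_greater_than, fail_if_outside):
        return "FAILED"
    if violated(warn_if_less_than, warn_if_greater_than, warn_if_outside):
        return "WARNING"
    return "PASSED"


# An accuracy of 0.84 is below the warning threshold but above the failure threshold.
print(evaluate_threshold(0.84, warn_if_less_than=0.85, fail_if_less_than=0.82))
# A false negative rate of 0.2 lies outside both ranges.
print(evaluate_threshold(0.2, warn_if_outside=(0.05, 0.1), fail_if_outside=(0.02, 0.15)))
```

Checking the failure condition before the warning condition reflects the idea that a failure is the more severe outcome; whether the SDK reports both is not something this sketch tries to model.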

Performance Tests with Relative Thresholds¶

In addition to absolute values, as discussed above, the warning and/or failure thresholds of a performance test can be defined relative to the metric result on a reference split. For example, rather than testing whether the classification accuracy of models on split A is >= 90% (by specifying fail_if_less_than=0.9 when the test is added), a relative threshold lets users check whether the accuracy of models on split A is greater than or equal to the accuracy of models on split B (i.e., the reference split) plus an offset (by specifying fail_if_less_than=offset, fail_threshold_type="RELATIVE", and reference_split_name="B").

Here are some examples of defining performance tests with relative thresholds:

# Explicitly specifying the reference split of a RELATIVE threshold

tru.tester.add_performance_test(

test_name="Accuracy Test",

data_split_names=["split1_name", "split2_name"],

metric="CLASSIFICATION_ACCURACY",

warn_if_less_than=-0.05,

warn_threshold_type="RELATIVE",

fail_if_less_than=-0.08,

fail_threshold_type="RELATIVE",

reference_split_name="reference_split_name"

)

# Not explicitly specifying the reference split on a RELATIVE threshold means

# the reference split is each model's train split

tru.tester.add_performance_test(

test_name="Accuracy Test",

data_split_names=["split1_name", "split2_name"],

metric="FALSE_POSITIVE_RATE",

warn_if_greater_than=0.02,

warn_threshold_type="RELATIVE",

fail_if_greater_than=0.021,

fail_threshold_type="RELATIVE"

)

# RELATIVE test using reference model instead of reference split

tru.tester.add_performance_test(

test_name="Accuracy Test",

data_split_names=["split1_name", "split2_name"],

metric="CLASSIFICATION_ACCURACY",

warn_if_less_than=0,

warn_threshold_type="RELATIVE",

fail_if_less_than=-0.01,

fail_threshold_type="RELATIVE",

reference_model_name="reference_model_name"

)

# RELATIVE test using both reference model and reference split

tru.tester.add_performance_test(

test_name="Accuracy Test",

data_split_names=["split1_name", "split2_name"],

metric="CLASSIFICATION_ACCURACY",

warn_if_less_than=0,

warn_threshold_type="RELATIVE",

fail_if_less_than=-0.01,

fail_threshold_type="RELATIVE",

reference_model_name="reference_model_name",

reference_split_name="reference_split_name"

)
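The relative thresholds in the examples above can be read as limits on the difference between the metric on the tested split and the metric on the reference. The following sketch illustrates that reading for the "less than" case. It assumes the offset is compared against (metric on tested split) minus (metric on reference); the function name relative_outcome and the sample accuracies are hypothetical.

```python
from typing import Optional


def relative_outcome(
    metric_value: float,
    reference_value: float,
    warn_if_less_than: Optional[float] = None,
    fail_if_less_than: Optional[float] = None,
) -> str:
    """Compare the difference to the reference against RELATIVE offsets.

    Assumption: a RELATIVE threshold of -0.05 means "at most 0.05 below the
    reference", i.e. metric_value < reference_value + (-0.05) triggers it.
    """
    difference = metric_value - reference_value
    if fail_if_less_than is not None and difference < fail_if_less_than:
        return "FAILED"
    if warn_if_less_than is not None and difference < warn_if_less_than:
        return "WARNING"
    return "PASSED"


reference_accuracy = 0.90  # hypothetical accuracy on the reference split

# 0.04 below the reference: within both offsets.
print(relative_outcome(0.86, reference_accuracy, warn_if_less_than=-0.05, fail_if_less_than=-0.08))
# 0.06 below the reference: past the warning offset only.
print(relative_outcome(0.84, reference_accuracy, warn_if_less_than=-0.05, fail_if_less_than=-0.08))
# 0.10 below the reference: past the failure offset.
print(relative_outcome(0.80, reference_accuracy, warn_if_less_than=-0.05, fail_if_less_than=-0.08))
```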

See Viewing List of Defined Tests for the list of performance tests defined. See Getting Test Results for guidance on obtaining the outcome of performance tests.

Fairness Tests¶

A fairness test computes a fairness metric for your model(s) on a set of data, then evaluates the result against user-defined numerical thresholds that serve as either the warning or the failure condition of the test. A fairness test consists of five parts:

- Data split

- Protected segment to check fairness

- Comparison segment against which to check fairness (optional; the complement of the protected segment is used by default)

- Fairness metric

- Optional failure and/or warning condition for the test.

A fairness test is defined using the add_fairness_test function of the Tester class. You can access an instance of this class under tru.tester.

Here are some examples:

# Explicitly specifying comparison segment

tru.tester.add_fairness_test(

test_name="Fairness Test",

data_split_names=["split1_name", "split2_name"],

protected_segments=[("segment_group_name", "protected_segment_name")],

comparison_segments=[("segment_group_name", "comparison_segment_name")],

comparison_segment_name=<comparison segment name>,

metric="DISPARATE_IMPACT_RATIO",

warn_if_outside=[0.8, 1.25],

fail_if_outside=[0.5, 2]

)

# Not specifying comparison segment means the complement of the protected segment

# will be used as comparison

tru.tester.add_fairness_test(

test_name="Fairness Test",

data_split_names=["split1_name", "split2_name"],

protected_segments=[("segment_group_name", "protected_segment_name")],

metric="DISPARATE_IMPACT_RATIO",

warn_if_outside=[0.9, 1.15],

fail_if_outside=[0.8, 1.25]

)

See Python SDK Reference for additional details.

The threshold of a fairness test can be edited by calling the same function with the flag overwrite=True.

See Viewing List of Defined Tests for the list of fairness tests defined. See Getting Test Results for guidance on obtaining the outcome of fairness tests.
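As an illustration of the kind of value a fairness test checks, the sketch below computes a disparate impact ratio using its common textbook definition: the rate of favorable outcomes in the protected segment divided by the rate in the comparison segment. Whether TruEra computes DISPARATE_IMPACT_RATIO in exactly this way is an assumption, and the function name and sample data are hypothetical.

```python
from typing import Sequence


def disparate_impact_ratio(
    protected_outcomes: Sequence[int],
    comparison_outcomes: Sequence[int],
) -> float:
    """Ratio of favorable-outcome rates (1 = favorable, 0 = unfavorable)."""
    if not protected_outcomes or not comparison_outcomes:
        raise ValueError("both segments must contain at least one data point")
    protected_rate = sum(protected_outcomes) / len(protected_outcomes)
    comparison_rate = sum(comparison_outcomes) / len(comparison_outcomes)
    if comparison_rate == 0:
        raise ValueError("comparison segment has no favorable outcomes")
    return protected_rate / comparison_rate


def outside(value: float, low: float, high: float) -> bool:
    """True when value falls outside the closed interval [low, high]."""
    return not (low <= value <= high)


# Hypothetical binary predictions for each segment.
protected = [1, 0, 0, 1, 0]   # 2 of 5 favorable
comparison = [1, 1, 0, 1, 0]  # 3 of 5 favorable

ratio = disparate_impact_ratio(protected, comparison)  # about 0.67
if outside(ratio, 0.5, 2):       # mirrors fail_if_outside=[0.5, 2]
    outcome = "FAILED"
elif outside(ratio, 0.8, 1.25):  # mirrors warn_if_outside=[0.8, 1.25]
    outcome = "WARNING"
else:
    outcome = "PASSED"
print(round(ratio, 2), outcome)
```

A ratio of 1 means both segments receive favorable outcomes at the same rate, which is why the thresholds in the examples above are ranges centered around 1.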

Stability Tests¶

A stability test computes the model score drift between two sets of data, then evaluates it against user-defined numerical thresholds that serve as either the warning or the failure condition of the test.

Consequently, a stability test consists of three parts:

- Two sets of data to be used for the test (the comparison and the reference data split, both filtered by a segment)

- A drift metric

- An optional failure and/or warning condition for the test.

A stability test can be defined using the add_stability_test function of the Tester class (you can access an instance of this class under tru.tester). Here's an example:

tru.tester.add_stability_test(

test_name="Stability Test",

comparison_data_split_names=["split1_name", "split2_name"],

base_data_split_name="reference_split_name",

metric="DIFFERENCE_OF_MEAN",

warn_if_outside=[-1, 1],

fail_if_outside=[-2, 2]

)

See the Python SDK Reference for additional details.

The threshold of a stability test can be edited by calling the same function with the flag overwrite=True.

You can review the list of defined stability tests in Viewing List of Defined Tests. For guidance on obtaining the outcome of stability tests, see Getting Test Results.
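For intuition about what a drift metric measures, here is a small sketch of a difference-of-means calculation between the model scores on two splits. It assumes the metric is the mean score on the comparison split minus the mean score on the base split; the direction of the subtraction, the function name, and the sample scores are assumptions rather than documented SDK behavior.

```python
from statistics import fmean
from typing import Sequence


def difference_of_mean(
    comparison_scores: Sequence[float],
    base_scores: Sequence[float],
) -> float:
    """Mean score on the comparison split minus mean score on the base split."""
    return fmean(comparison_scores) - fmean(base_scores)


# Hypothetical model scores on each split.
base_scores = [0.2, 0.4, 0.6]        # mean 0.4
comparison_scores = [0.5, 0.7, 0.9]  # mean 0.7

drift = difference_of_mean(comparison_scores, base_scores)

# Mirrors warn_if_outside=[-1, 1] and fail_if_outside=[-2, 2] from the example above.
if not (-2 <= drift <= 2):
    outcome = "FAILED"
elif not (-1 <= drift <= 1):
    outcome = "WARNING"
else:
    outcome = "PASSED"
print(round(drift, 3), outcome)
```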

Feature Importance Tests¶

A feature importance test checks the global importance value of each feature on a set of data, counts the number of features whose global importance value is less than min_importance_value, and evaluates that count against user-defined numerical thresholds that serve as either the warning or the failure condition of the test.

A feature importance test consists of five parts:

- A set of data points, either a full data split or a segment

- Minimum global importance value

- Background data split

- Score type

- Optional failure and/or warning condition for the test.

The add_feature_importance_test function of the Tester class defines a feature importance test. You can access an instance of this class under tru.tester.

Here's an example:

tru.tester.add_feature_importance_test(

test_name="Feature Importance Test",

data_split_names=["split1_name", "split2_name"],

min_importance_value=0.01,

background_split_name="background split name",

score_type=<score_type>, # (e.g., "regression", or "logits"/"probits"

# for the classification project)

warn_if_greater_than=5,

fail_if_greater_than=10

)

See Python SDK Reference for additional details.

The threshold of a feature importance test can be edited by calling the same function with the flag overwrite=True.

See Viewing List of Defined Tests for the list of feature importance tests defined. See Getting Test Results for guidance on obtaining the outcome of feature importance tests.
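The counting step behind a feature importance test can be sketched in a few lines. The example assumes global importance values are already available as non-negative numbers keyed by feature name; the function name, feature names, values, and thresholds are hypothetical and chosen only to show how the count relates to warn_if_greater_than and fail_if_greater_than.

```python
from typing import Mapping


def count_low_importance_features(
    global_importances: Mapping[str, float],
    min_importance_value: float,
) -> int:
    """Count features whose global importance is below min_importance_value."""
    return sum(1 for value in global_importances.values() if value < min_importance_value)


# Hypothetical global importance values for four features.
importances = {
    "age": 0.30,
    "income": 0.25,
    "zip_code": 0.004,
    "account_id": 0.0,
}

low_count = count_low_importance_features(importances, min_importance_value=0.01)

# Illustrative thresholds (smaller than in the example above so the warning triggers).
warn_if_greater_than = 1
fail_if_greater_than = 3
if low_count > fail_if_greater_than:
    outcome = "FAILED"
elif low_count > warn_if_greater_than:
    outcome = "WARNING"
else:
    outcome = "PASSED"
print(low_count, outcome)
```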

Viewing Your List of Defined Tests¶

Use get_model_tests to see the list of tests defined for the data collection (additional details are available in the Python SDK Reference).

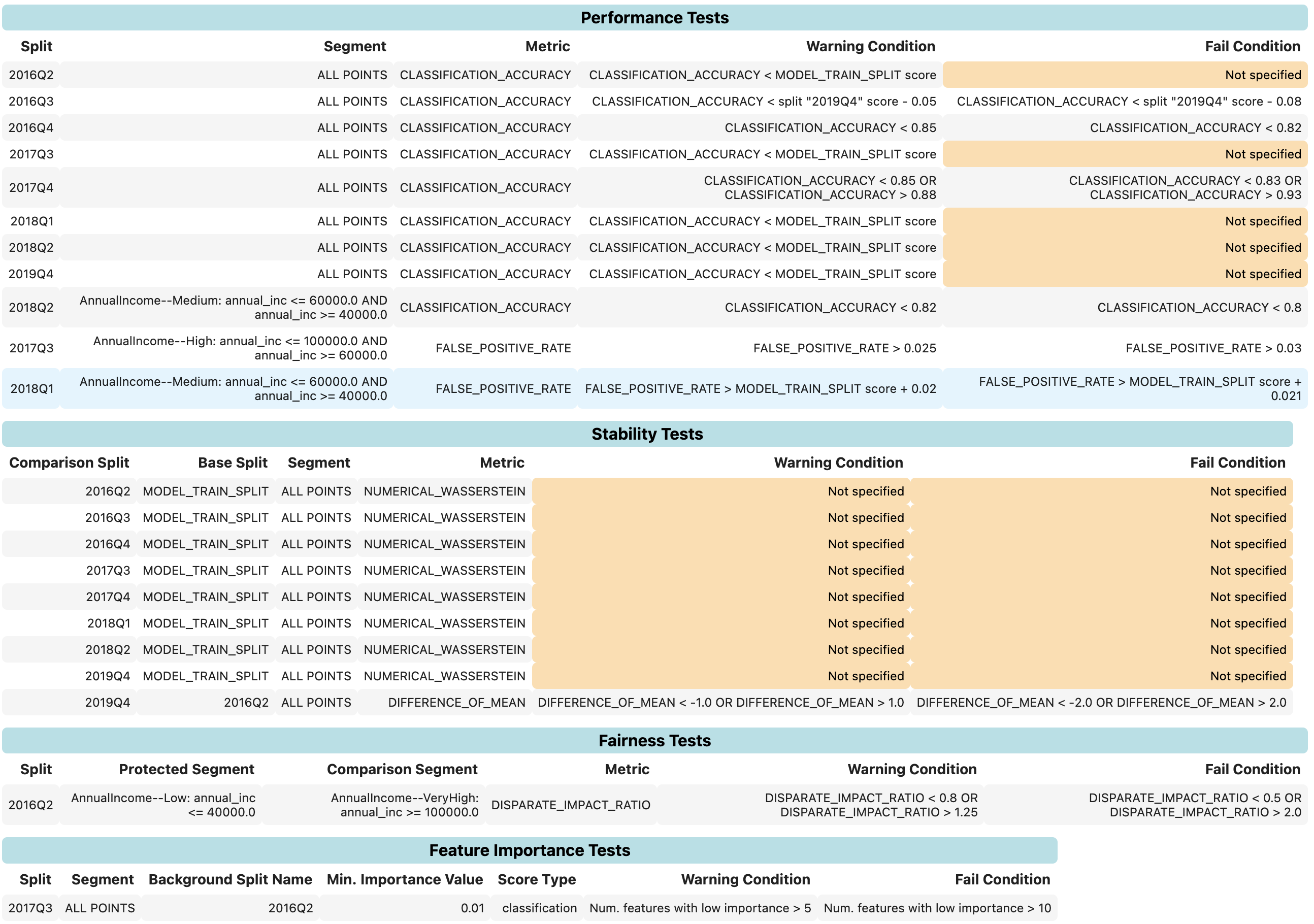

Output for this function in a Jupyter notebook will look something like the following:

The amber/orange highlights in the table above indicate thresholds not yet defined.

Getting Test Results¶

To see the results of a model test, use get_model_test_results. Be aware that calling this method will trigger the computations necessary to obtain the test results.

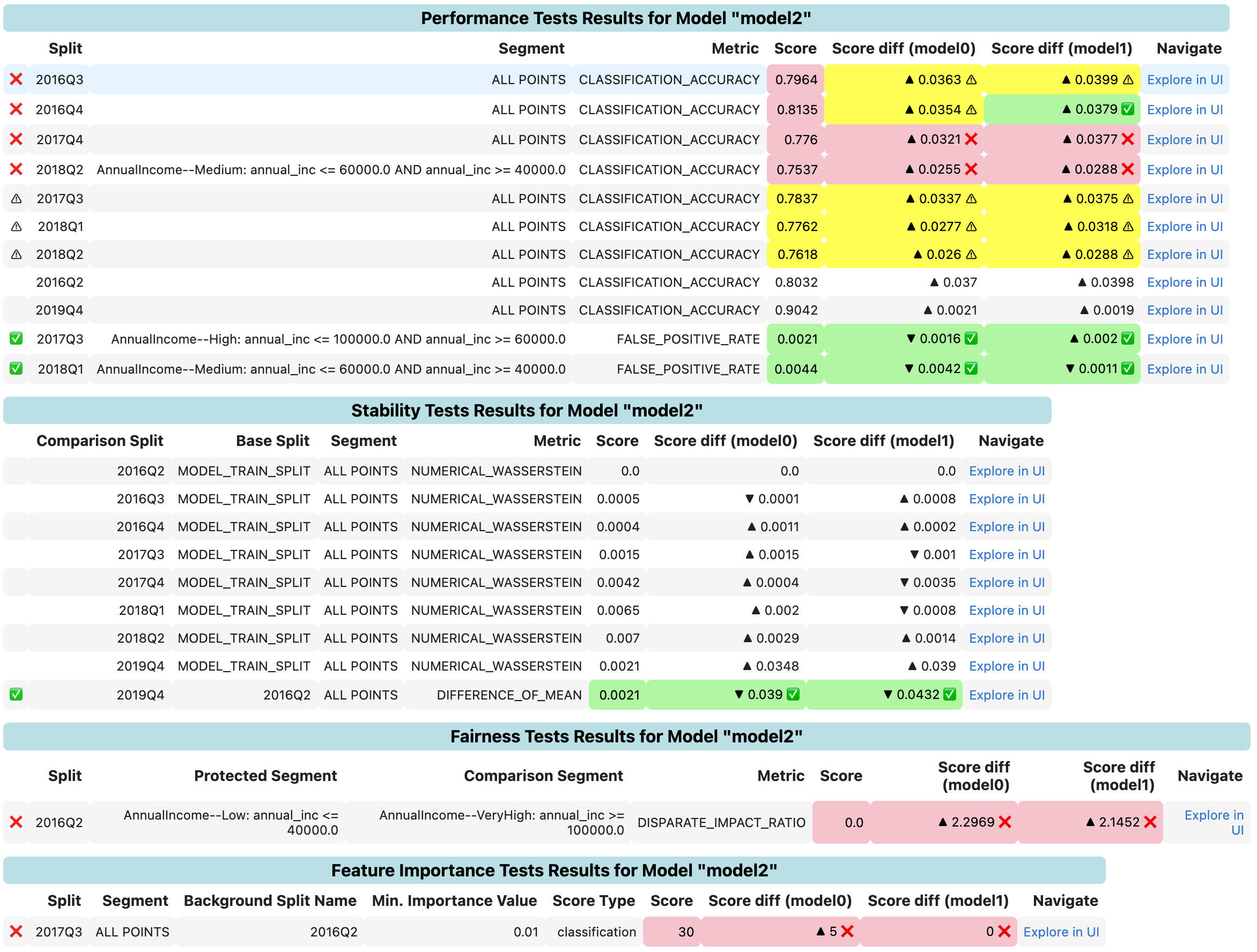

Here's an example of the output of this function in a Jupyter notebook:

- ❌ (red highlight) indicates the test failed according to your user-defined failure criteria

- ⚠️ (yellow highlight) indicates the test raised a warning according to your user-defined warning criteria

- ✅ (green highlight) indicates the test passed

- No symbol indicates no applicable warning or failure threshold is defined for the test.

See TruEra's Python SDK Reference for complete details.

Viewing Your Test Leaderboard¶

A summary of test results for all models in the data collection is obtained using get_model_leaderboard (see Python SDK Reference). Calling this method will trigger the computations necessary to obtain the test results.

Here's an example of the Jupyter notebook output:

Automated Test Creation¶

After split ingestion, TruEra automates creation of the following performance and fairness tests:

- Default performance tests check for accuracy (classification project) or MAE (regression project). A warning results if the performance of the model on the split is lower than its performance on the model's training split.

- Default fairness tests check the fairness criteria against the protected segments defined in the project.

Similar to manually defined tests, all tests created automatically by this method are editable using the flag overwrite=True in the corresponding add test method.

This functionality is enabled for the Python SDK by executing the following command:

tru.activate_client_setting('create_model_tests_on_split_ingestion')

Similarly, this functionality is enabled for the CLI by executing this command:

tru activate feature create_model_tests_on_split_ingestion
